Run Ollama on Windows: A Comprehensive Guide (with Images)

Hey there! Want to run cool large language models (LLMs) on your own Windows computer, even without an internet connection? Then you definitely need to check out Ollama!

Don't worry if you're not a tech expert; this guide is tailored just for you. We'll walk you through the download, installation, and configuration process step by step, and show you how to verify that it's working correctly. You'll be up and running with local large language models in no time!

What will we be doing? Simply put, these steps:

- "Invite" it from the official website (download the installer)

- Give it a home and set some "rules" (configure environment variables, especially where to store models)

- Start it up and let it get to "work" (run the Ollama service)

- Check everything to make sure it's OK (verify the installation)

Ready? Let's Go!

Step 1: Download and Install Ollama

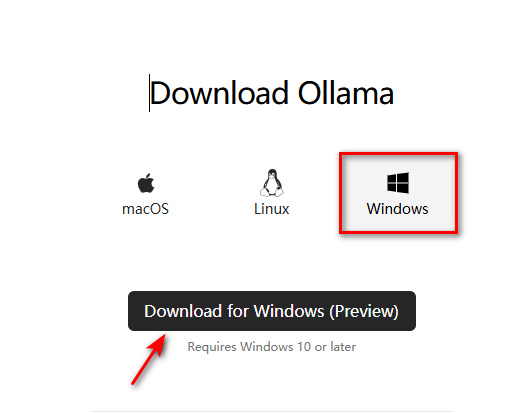

Head straight to the official website for the download:

- Ollama Download: https://ollama.com/download

- Want to learn more? Check out the homepage: https://ollama.com

- For the more adventurous, check out the GitHub repository: https://github.com/ollama/ollama/

On the download page, the big "Download for Windows" button should be pretty obvious. Click it! Your browser will start downloading a file called `OllamaSetup.exe`.

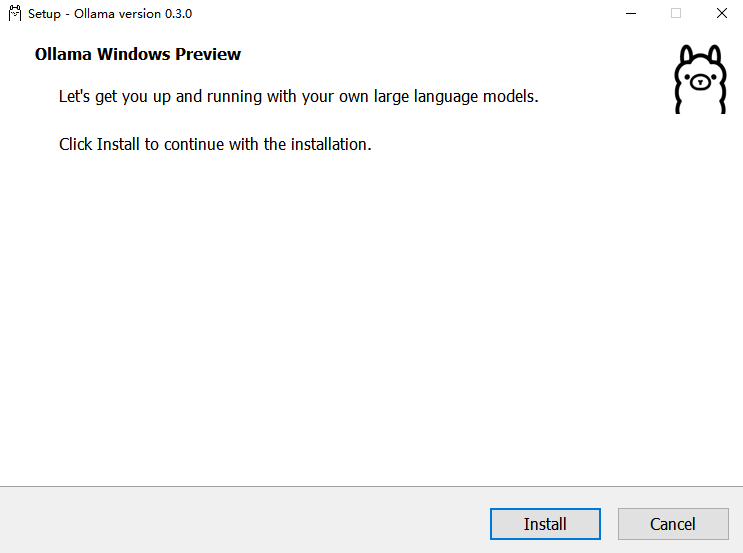

"Brainless" Installation: Once the download is complete, find

OllamaSetup.exeand double-click it. In the window that pops up, just click "Install". Then, patiently wait for the installation progress bar to finish. It will automatically prepare everything you need.

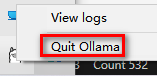

Installation Complete - It Might Start Automatically: After installation, Ollama will usually start quietly in the background. You can find the Ollama icon in the taskbar notification area (where the time and network icons are) in the lower right corner of your screen. Right-click on it to select "Quit Ollama" to close it, or click "Logs" to see its runtime log.

Step 2: Configure Environment Variables (This Step is Crucial!)

After installing Ollama, we strongly recommend that you take a few minutes to configure the environment variables. This will allow you to better "command" Ollama, for example, to tell it where the model files should be stored.

What are "environment variables"?

Simply put, they are like "sticky notes" attached to your computer system, containing configuration information. Programs (like Ollama) can read these "notes" to know how to run, where to put things, and so on.

Some Commonly Used "Sticky Notes" for Ollama:

| Variable Name (Note Title) | Explanation (Note Content) | Our Recommendation |
|---|---|---|
| `OLLAMA_MODELS` | Location of the model repository! By default, downloaded models go in your user folder on the C drive (like `C:\Users\YourUsername\.ollama\models`). We strongly recommend moving it to another drive (like D or E)! Otherwise, the C drive will run out of space as you download more and larger models! | For example, `E:\ollama\models` (remember to create the `E:\ollama` folder first) |
| `OLLAMA_HOST` | Who can access Ollama? The default is `127.0.0.1`, which means only your own computer can use it. If you want other devices on your local network (such as phones, tablets, or other computers) to connect to it, change it to `0.0.0.0`. | Set to `0.0.0.0` if you want local network sharing, otherwise the default is fine |
| Port (part of `OLLAMA_HOST`) | The "door number" of the Ollama service. The default is `11434`. If this "door number" is occupied by another program, Ollama will not start. Ollama reads the port from `OLLAMA_HOST` rather than from a separate `OLLAMA_PORT` variable, so append an unused number to the address (such as `127.0.0.1:8080`). | Default `11434`, change it if there is a conflict |
| `OLLAMA_ORIGINS` | Which "visitors" (HTTP clients) are allowed to knock on the door. Generally, you don't need to set this specifically. To save trouble, you can set it to `*`, which accepts requests from any origin. | Set to `*` for local use |
| `OLLAMA_KEEP_ALIVE` | How long can the model stay on "standby" after being loaded into memory? The default is `5m` (5 minutes). If no one uses it within that time, the model is unloaded from memory and reloaded on the next request. You can set it to a duration (such as `30m` or `24h`), a pure number (in seconds), `0` to unload immediately after use, or a negative number to keep the model loaded indefinitely. Want faster responses? We recommend setting it longer, like `24h` (24 hours). | Set to `24h` for speed |
| `OLLAMA_NUM_PARALLEL` | How many requests can be processed simultaneously. This guide assumes a default of 1; the default differs between Ollama releases. You can increase it if your computer is powerful enough. | The default is usually enough; raise it only on a powerful machine |
| `OLLAMA_MAX_LOADED_MODELS` | The maximum number of models that can "live" in memory at the same time. This guide assumes a default of 1; the default differs between Ollama releases. If you frequently switch between different models, you can increase this, but it will consume more memory. | Adjust if you have enough memory and need it |
| `OLLAMA_DEBUG` | Do you want to see super detailed runtime logs? You can set it to `1` when debugging. | Set to `1` when you need to troubleshoot problems |
| `OLLAMA_MAX_QUEUE` | The maximum length of the request queue. The default is 512. Generally, you don't need to change it. | Default is fine |

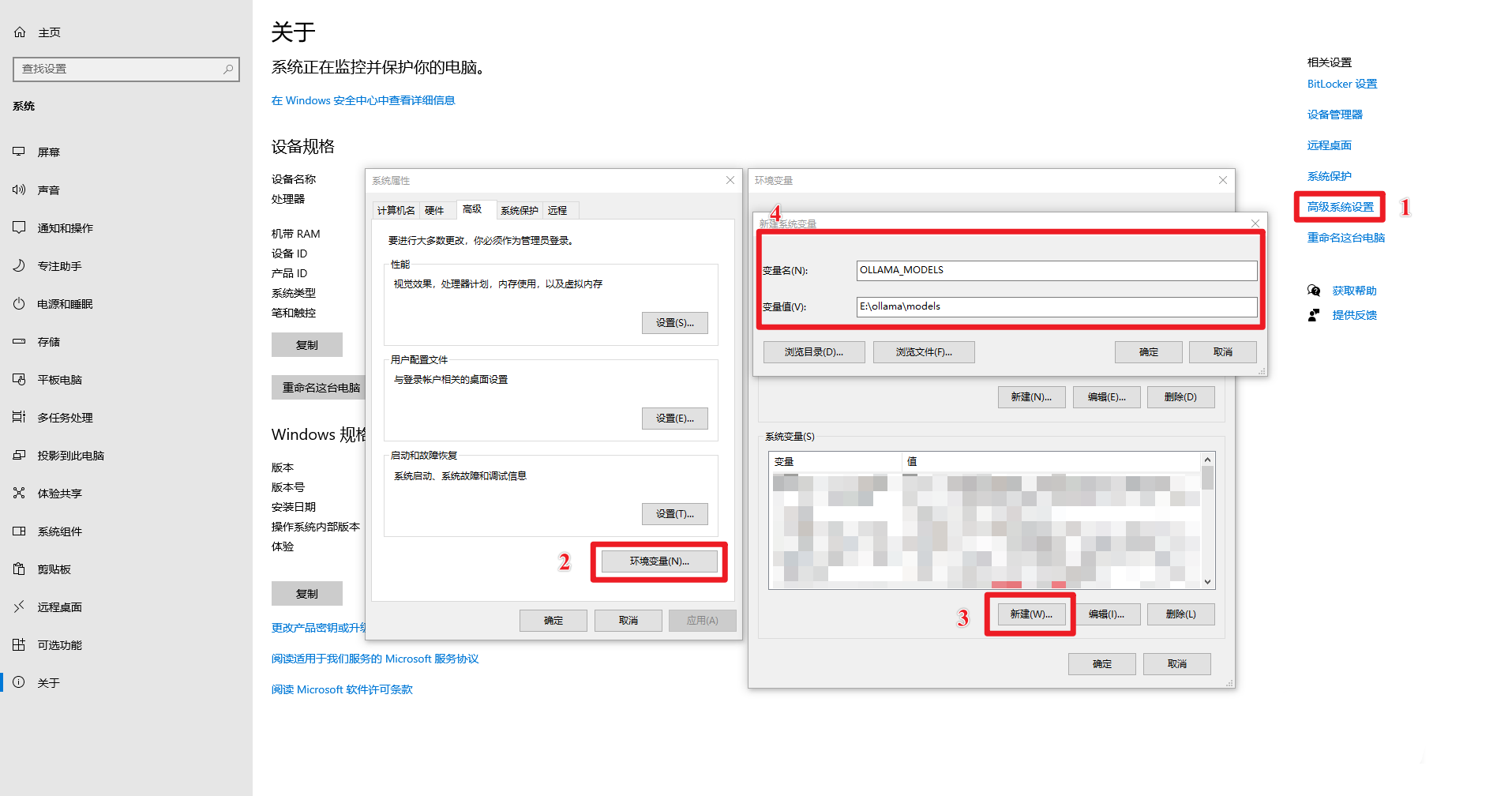
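The `OLLAMA_KEEP_ALIVE` row accepts several kinds of value, which is easy to get wrong. The sketch below is only an illustration of the rules described in the table, not Ollama's own parser: the function name `describe_keep_alive` is made up for this guide, and it deliberately handles just a bare number of seconds or one number with a single `s`, `m`, or `h` unit.

```python
import re

# Seconds per unit for the simple "number + unit" form used in this guide.
_UNITS = {"s": 1, "m": 60, "h": 3600}


def describe_keep_alive(value: str) -> str:
    """Explain an OLLAMA_KEEP_ALIVE value in plain words (simplified rules)."""
    text = value.strip()
    if re.fullmatch(r"-?\d+", text):
        # A bare integer is read as a number of seconds.
        seconds = int(text)
    else:
        # Simplification: one number plus one unit, e.g. "5m" or "24h".
        match = re.fullmatch(r"(-?\d+)([smh])", text)
        if match is None:
            raise ValueError(f"unsupported keep-alive value: {value!r}")
        seconds = int(match.group(1)) * _UNITS[match.group(2)]
    if seconds < 0:
        return "stay loaded indefinitely"
    if seconds == 0:
        return "unload right after each request"
    return f"unload after {seconds} idle seconds"


if __name__ == "__main__":
    for sample in ("5m", "24h", "0", "-1"):
        print(sample, "->", describe_keep_alive(sample))
```

Running it prints one line per sample value, which is a quick way to double-check what a setting means before you save it.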

How to set these "sticky notes" (environment variables)?

Find the Settings:

- The fastest way: Search for "environment variables" in the Windows search box, then click "Edit the system environment variables".

- Or: Right-click "This PC" -> "Properties" -> "Advanced system settings" -> "Environment Variables".

- Or: Control Panel -> System and Security -> System -> Advanced system settings -> Environment Variables.

- You can also: Press `Win + R`, enter `sysdm.cpl`, press Enter, go to the "Advanced" tab, and click "Environment Variables".

Create a New "Sticky Note": In the "Environment Variables" window that pops up, find the "System variables" area below (if you only want the settings to apply to the current user, you can select the "User variables" area above), and click "New".

Write the "Note Title" and "Content":

- Enter the name from the table above in "Variable name", such as `OLLAMA_MODELS`.

- Enter the value you want in "Variable value", such as `E:\ollama\models`.

- Important Note: For path settings like `OLLAMA_MODELS`, make sure that the `E:\ollama` folder has already been created, or at least that the `E:` drive exists. Ollama may create the `models` subfolder for you, but to be safe, it's best to have the parent directory in place first.

Save the "Sticky Note": Click "OK".

Repeat Adding: If you need to set other environment variables (such as `OLLAMA_KEEP_ALIVE`), repeat the "Create", "Write", and "Save" steps above.

"OK" All the Way: Click "OK" to close all the settings windows you opened.

Make the Settings Take Effect: Restart Ollama (if it's running, quit it and then start it again), and open a new Command Prompt/PowerShell window. Programs that were already running keep the old values, so only a fresh start picks up the new environment variables.
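The note about having the parent folder in place before pointing `OLLAMA_MODELS` at it can also be handled with a few lines of Python. This is a minimal sketch, assuming you prefer a script over creating the folder by hand; `prepare_models_dir` is a hypothetical helper name, and the demo runs in a throwaway temporary folder rather than on a real drive.

```python
import tempfile
from pathlib import Path


def prepare_models_dir(models_path: str) -> Path:
    """Create the parent folder of the models path and return it."""
    target = Path(models_path)
    # The drive (or other root) must already exist; it cannot be created.
    root = Path(target.anchor) if target.anchor else Path.cwd()
    if not root.exists():
        raise FileNotFoundError(f"drive or root not found: {root}")
    # Create only the parent (e.g. E:\ollama); the models subfolder is left alone.
    target.parent.mkdir(parents=True, exist_ok=True)
    return target.parent


if __name__ == "__main__":
    # Demo in a temporary folder so nothing on a real drive is touched.
    with tempfile.TemporaryDirectory() as tmp:
        parent = prepare_models_dir(str(Path(tmp) / "ollama" / "models"))
        print("created:", parent)
```

On your own machine you would call it with the value you entered in "Variable value", for example `prepare_models_dir(r"E:\ollama\models")`.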

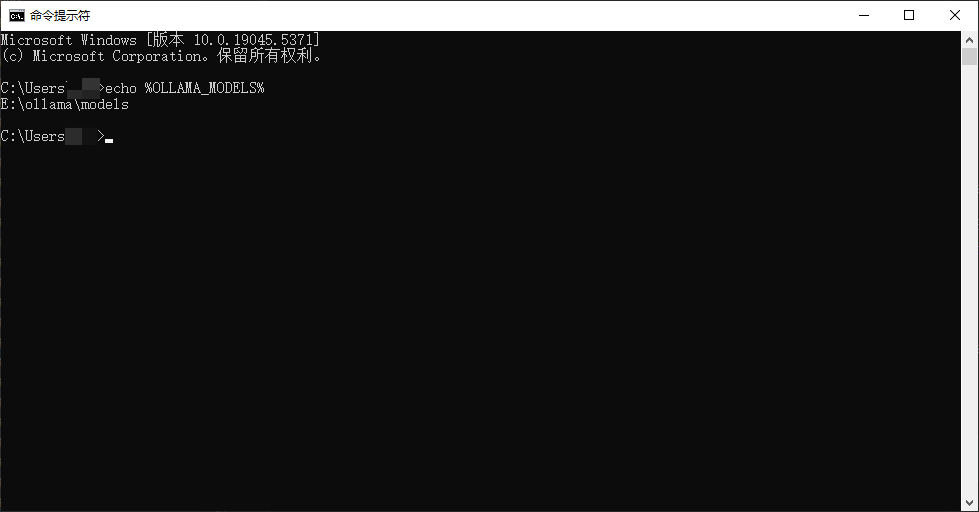

Check if the Settings are Correct:

Open Command Prompt (CMD) or PowerShell. (Remember to open a new one!)

Enter `echo %OLLAMA_MODELS%` (in PowerShell, use `echo $env:OLLAMA_MODELS` instead) and press Enter. If the path you just set is displayed below (such as `E:\ollama\models`), then you're done!
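If you would rather check several "sticky notes" at once, a short script can list which ones the current process actually sees. This is a rough sketch: `effective_settings` and `TABLE_DEFAULTS` are names invented here, and the fallback values are simply copied from the table earlier in this guide, so they may not match the defaults of your Ollama version.

```python
import os

# Fallback values copied from the table in this guide (may differ per version).
TABLE_DEFAULTS = {
    "OLLAMA_MODELS": os.path.join(os.path.expanduser("~"), ".ollama", "models"),
    "OLLAMA_HOST": "127.0.0.1",
    "OLLAMA_KEEP_ALIVE": "5m",
    "OLLAMA_MAX_QUEUE": "512",
}


def effective_settings(env=None):
    """Return {name: (value, source)} for the variables listed above."""
    env = os.environ if env is None else env
    report = {}
    for name, default in TABLE_DEFAULTS.items():
        if name in env:
            report[name] = (env[name], "set")
        else:
            report[name] = (default, "table default")
    return report


if __name__ == "__main__":
    for name, (value, source) in effective_settings().items():
        print(f"{name} = {value}  ({source})")
```

Run it from a newly opened terminal; a variable you just created should show up as `set` rather than `table default`.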

Step 3: Start the Ollama Service

Although it may have started automatically after installation, we sometimes need to control it manually. Or, if you have disabled automatic startup, you need to start it manually.

Open Command Prompt (CMD) or PowerShell.

Enter `ollama serve` and press Enter.

```bash
ollama serve
```

Having Trouble?

If you see an error like this:

`Error: listen tcp 127.0.0.1:11434: bind: Only one usage of each socket address...`

This means that the "door number" `11434` is already in use! The most common reason is that Ollama is set to start automatically when you install it, so it's already running in the background. When you manually start it again, there is a conflict.

What to do?

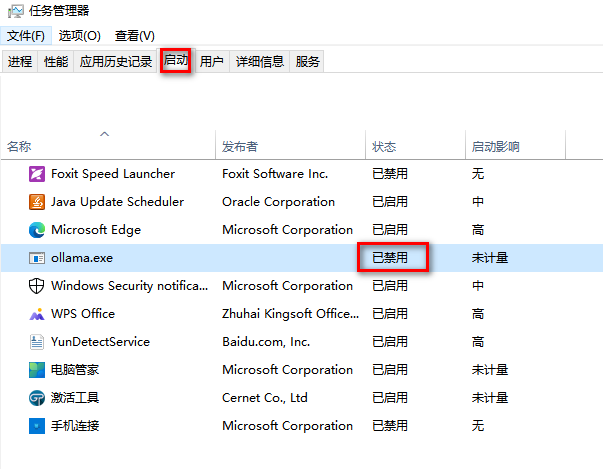

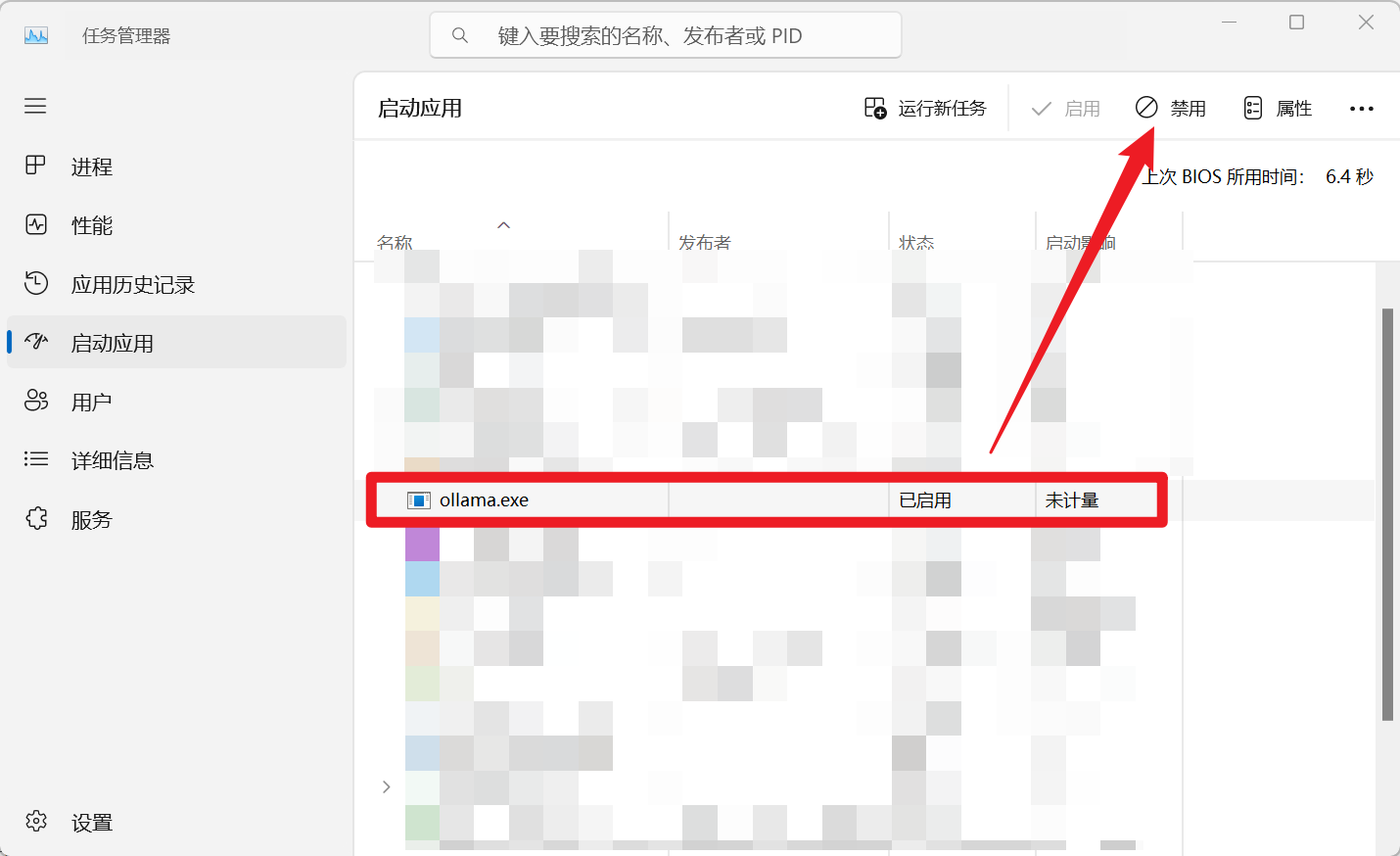

Method 1 (Recommended): Close the Ollama that is already running.

- Summon Task Manager by pressing `Ctrl + Shift + Esc`.

- If your Task Manager has a "Startup apps" or similar tab, find "Ollama" there, right-click on it, and select "Disable". This will prevent it from running automatically next time you start your computer. (Do this now; Step 5 covers it in detail.)

- Switch to the "Processes" or "Details" tab, find all processes named `ollama.exe`, select them, and click "End task" in the lower right corner.

Method 2 (Not Recommended Unless You Understand the Consequences): Give Ollama a new "door number".

- Follow the method in Step 2 to change the port, giving Ollama a port number that you are sure is not in use. Ollama takes its port from the `OLLAMA_HOST` value (for example `127.0.0.1:8080`), not from a separate `OLLAMA_PORT` variable.

After resolving the port conflict, try running the `ollama serve` command again. If there are no errors, it means the Ollama service has started successfully.
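If you go with Method 2 and are not sure which "door number" is free, you can let the operating system suggest one. The snippet below is a small sketch using Python's standard `socket` module; `find_free_port` is a name chosen for this guide. Keep in mind that the port is only known to be free at the moment of the check, so another program could still grab it before Ollama starts.

```python
import socket


def find_free_port(host: str = "127.0.0.1") -> int:
    """Ask the OS for a TCP port that is currently unused on this host."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        # Binding to port 0 tells the OS to pick any free port.
        sock.bind((host, 0))
        return sock.getsockname()[1]


if __name__ == "__main__":
    print("A port that is free right now:", find_free_port())
```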

Make Sure It's Really "Listening":

Open another Command Prompt (CMD) or PowerShell.

Enter `netstat -aon | findstr 11434` and press Enter. (If you changed the port, replace `11434` with the port number you changed it to.)

```bash
netstat -aon | findstr 11434
```

If Ollama is listening, the output will look something like this:

`TCP 127.0.0.1:11434 0.0.0.0:0 LISTENING 17556`

That's correct! It means Ollama is waiting to receive commands on port `11434`. The last number (like `17556`) is the Ollama process ID (PID).

If you're curious, you can use `tasklist | findstr "17556"` (replace the number with the PID you see) to see who this process is.

```bash
tasklist | findstr "17556"
```

You should be able to see the `ollama.exe` information.
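The same "is anything listening?" question can be asked from Python by simply trying to connect to the port. This is a minimal sketch, not a replacement for `netstat`: it only tells you that some program accepts connections on that port, not which one. The function name `is_listening` and the one-second timeout are choices made for this example.

```python
import socket


def is_listening(host: str = "127.0.0.1", port: int = 11434,
                 timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    print("Port 11434 answering:", is_listening())
```

If you changed the port, pass it in, for example `is_listening(port=8080)`.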

Step 4: Verify the Installation, Run a Model and Try It!

Open Command Prompt (CMD) or PowerShell.

Enter `ollama -h` and press Enter. If a bunch of Ollama help text comes out, congratulations, the installation is basically successful!

```bash
ollama -h
```

You should see output similar to this:

```
Large language model runner

Usage:
  ollama [flags]
  ollama [command]

Available Commands:
  serve       Start ollama
  create      Create a model from a Modelfile
  show        Show information for a model
  run         Run a model
  pull        Pull a model from a registry
  push        Push a model to a registry
  list        List models
  ps          List running models
  cp          Copy a model
  rm          Remove a model
  help        Help about any command

... (There may be more)
```
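If you want a script to confirm that the `ollama` command is reachable (for example, before running other automation), something like the following can work. It is a sketch under a couple of assumptions: that the installer put `ollama` on your `PATH`, and that the `--version` flag is available in your release. The helper name `cli_version` is made up for this guide.

```python
import shutil
import subprocess


def cli_version(exe: str = "ollama"):
    """Return the CLI's version text, or None if the command is not on PATH."""
    path = shutil.which(exe)
    if path is None:
        return None
    result = subprocess.run([path, "--version"],
                            capture_output=True, text=True, timeout=10)
    # Some tools print version info to stderr, so fall back to it.
    return result.stdout.strip() or result.stderr.strip()


if __name__ == "__main__":
    version = cli_version()
    print(version if version else "ollama was not found on PATH")
```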

Time to make it do something! Run the first model:

Visit the Ollama Model Library and find a model that you like. For example, `llama3` (Meta's new model, very popular) or `qwen:7b` (Alibaba's Qwen 7 billion parameter version).

In the command prompt or PowerShell, enter `ollama run <model name>`, for example:

```bash
ollama run llama3
```

Or

```bash
ollama run qwen:7b
```

Then press Enter.

Ollama will automatically start downloading the model files (if you set `OLLAMA_MODELS`, it will download to the folder you specified).

- Be patient! The first time you run a model, you need to download it. The files are usually quite large (a few GB is normal), and the download speed depends on your internet speed. You can see the download progress bar.

Once the download is complete, the model will start, and you can chat with it directly in the command line! Ask it anything and see its reaction. Want to exit the chat? Press `Ctrl + D` or type `/bye`.
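Besides the interactive chat, the running service also answers HTTP requests, which is handy once you want to use a model from your own programs. Below is a small sketch that talks to the `/api/generate` endpoint with only the Python standard library. It assumes the default address `http://127.0.0.1:11434` and that the `llama3` model has already been downloaded; `build_generate_request` and `ask` are helper names invented for this example.

```python
import json
import urllib.request


def build_generate_request(prompt: str, model: str = "llama3",
                           base_url: str = "http://127.0.0.1:11434"):
    """Build a POST request for Ollama's /api/generate endpoint."""
    # "stream": False asks for one complete JSON reply instead of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "llama3", timeout: float = 300.0) -> str:
    """Send the prompt to a running Ollama service and return its answer."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["response"]
```

With the service running, `print(ask("Say hello in five words."))` should print the model's reply; if Ollama is not running, the call fails with a connection error.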

Step 5: Don't Want Automatic Startup? Turn It Off!

Ollama defaults to adding itself to the Windows startup items during installation. If you don't think it's necessary to have it run automatically every time you start your computer (for example, if you want to control it manually, or if you already have a lot of startup items), you can turn it off.

- As of the date of this article (approximately mid-2024), the way to turn it off is as follows (the interface may vary slightly depending on the Windows version):

Summon Task Manager again (`Ctrl + Shift + Esc`).

Find the "Startup apps" (or just "Startup") tab.

Find "ollama.exe" (or just "Ollama Application") in the list.

Right-click on it and select "Disable". Done! It won't start automatically the next time you boot your computer.


Stuck? Check Out These Common Issues

- Ollama Won't Start?

  - The most likely reason: The port is in use (see the solution in Step 3).

  - Check if the environment variables (Step 2) are set correctly, and make sure you haven't made any mistakes in the paths.

  - If all else fails, check the Ollama logs (right-click the taskbar icon -> Logs), which may contain clues.

- Model Downloads are Extremely Slow?

  - Check whether your network connection is slow or unstable.

  - (Advanced Tip) You can try configuring a mirror source that is closer to you, but this is a bit more complex and beginners can ignore it for now.

- Ollama Feels Laggy When Running?

  - Check whether your computer's hardware is up to the job. Running large models takes a lot of memory, and a lot of graphics card power if you use GPU acceleration.

  - Try setting `OLLAMA_KEEP_ALIVE` longer (e.g., `24h`) to avoid repeated loading and unloading of the model.

  - Are too many models running at the same time? Or are there too many concurrent requests? (Check the `OLLAMA_MAX_LOADED_MODELS` and `OLLAMA_NUM_PARALLEL` settings.)

Alright! At this point, you should have successfully installed and configured Ollama on Windows, and even chatted with your first AI model! Doesn't it feel rewarding?