Spark-TTS is a recently popular open-source voice cloning project developed collaboratively by institutions like Hong Kong University of Science and Technology, Northwestern Polytechnical University, and Shanghai Jiao Tong University. Based on local testing, its performance is comparable to F5-TTS.

Spark-TTS supports voice cloning in both Chinese and English, and the installation process is straightforward. This guide details how to install and deploy it, and modify it to be compatible with the F5-TTS API interface, enabling direct use in pyVideoTrans software through the F5-TTS dubbing channel.

Prerequisites: Ensure Python 3.10, 3.11, or 3.12 is installed.
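To confirm that the interpreter on your PATH matches this requirement, you can ask Python itself. The snippet below is only an illustrative sketch; the helper name is_supported is made up for this example.

```python
import sys

# Major.minor versions named in the prerequisites above.
SUPPORTED = {(3, 10), (3, 11), (3, 12)}


def is_supported(version_info=sys.version_info):
    """Return True if the major.minor version is one of the supported ones."""
    return (version_info[0], version_info[1]) in SUPPORTED


print("Python", sys.version.split()[0], "supported:", is_supported())
```

Save it as a file such as check.py (any name works) and run it with python check.py.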

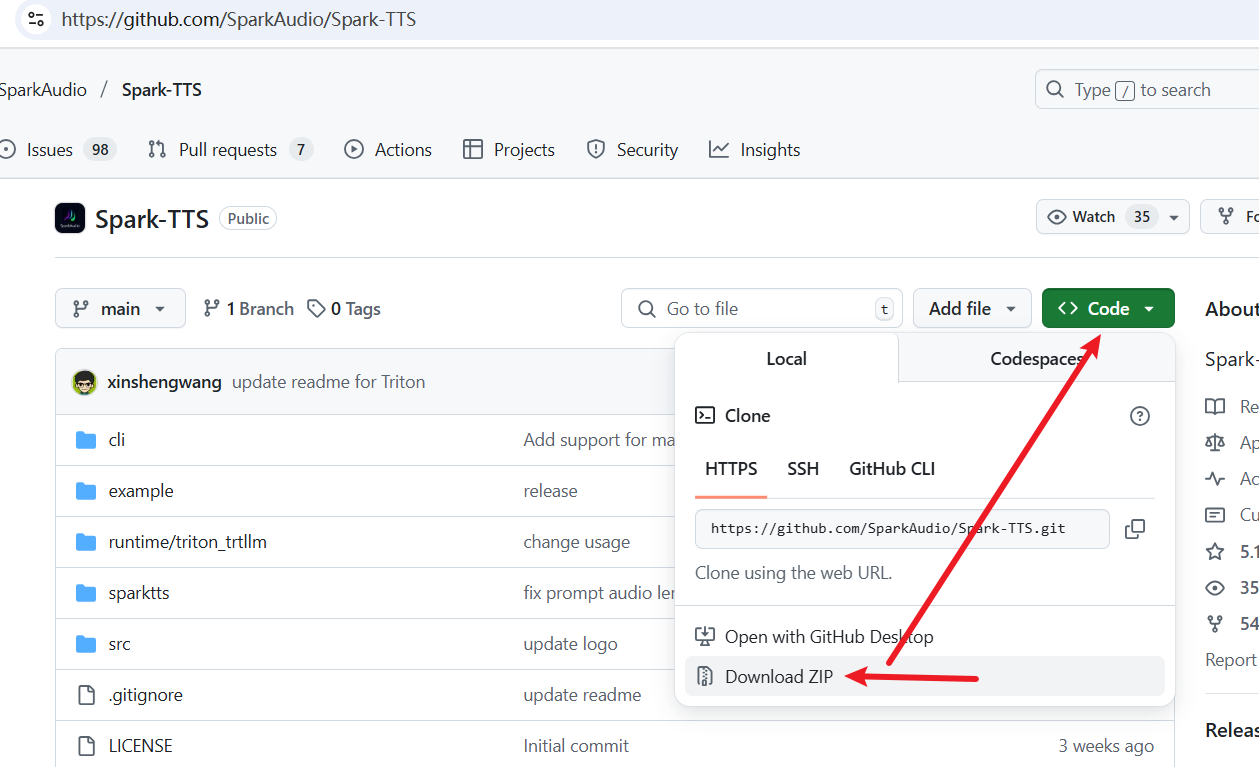

1. Download Spark-TTS Source Code

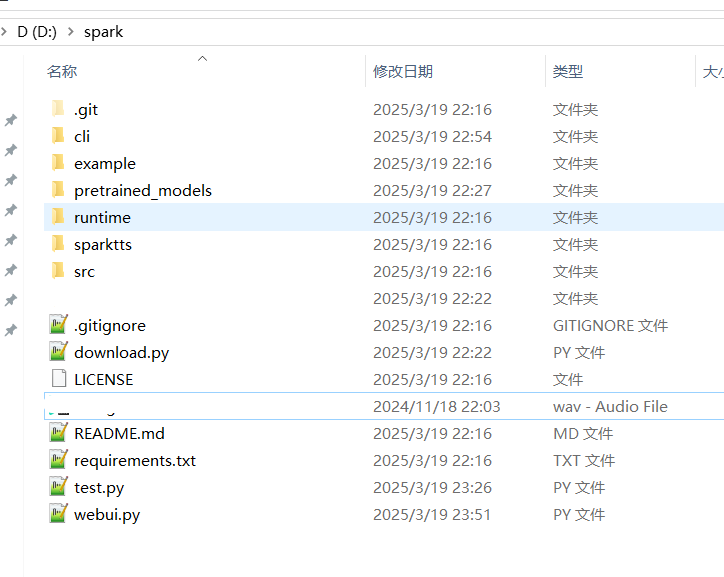

First, create a folder on a non-system drive whose name contains only English letters or digits, such as D:/spark. Using a non-system drive and avoiding Chinese characters helps prevent potential errors related to permissions or encoding.

Then, visit the official Spark-TTS repository: https://github.com/SparkAudio/Spark-TTS

As shown below, click to download the source code ZIP file:

After downloading, extract the contents and copy all files and folders into the D:/spark folder. The directory structure should look like this:

2. Create a Virtual Environment and Install Dependencies

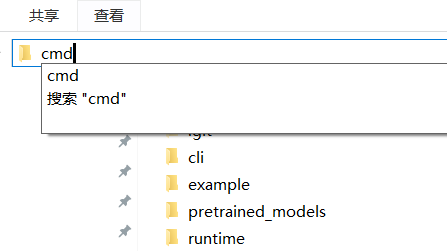

- Create a Virtual Environment

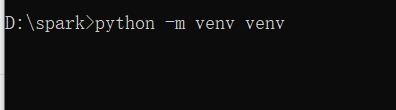

In the folder's address bar, type cmd and press Enter. In the opened terminal window, execute the following command:

python -m venv venv

As shown:

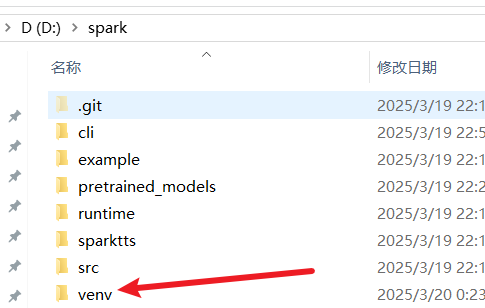

After execution, a venv folder will appear in the D:/spark directory:

Note: If you see an error like "python is not recognized as an internal or external command", Python may not be installed or may not have been added to the system environment variables. Refer to a relevant guide to install Python.

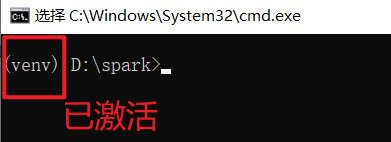

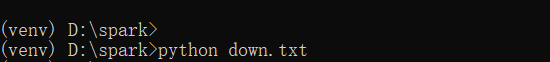

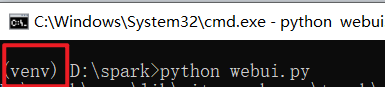

Next, run venv\scripts\activate to activate the virtual environment. Once activated, (venv) will appear at the beginning of the terminal line, indicating success. All subsequent commands must be run in this activated environment; always check for (venv) before proceeding.
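Besides looking for the (venv) prefix, you can ask the interpreter whether it is running from a virtual environment. This is a small sketch using only the standard library; the function name in_virtualenv is hypothetical.

```python
import sys


def in_virtualenv(prefix=None, base_prefix=None):
    """Return True when the interpreter runs from a venv.

    Inside a venv, sys.prefix points at the venv folder while
    sys.base_prefix still points at the base Python installation.
    """
    prefix = sys.prefix if prefix is None else prefix
    base_prefix = sys.base_prefix if base_prefix is None else base_prefix
    return prefix != base_prefix


print("venv active:", in_virtualenv())
print("interpreter:", sys.executable)
```

When the environment is active, the interpreter path printed on the second line should sit inside the venv folder.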

- Install Dependencies

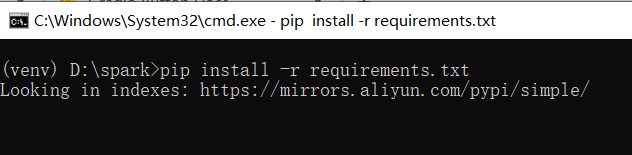

In the activated virtual environment, continue in the terminal and execute the following command to install all dependencies:

pip install -r requirements.txt

The installation may take some time; please wait patiently.

3. Download Models

Open-source AI models are often hosted on Hugging Face (huggingface.co). Since this site is blocked in some regions, you need proper internet access to download the models. Ensure your system proxy is configured correctly.
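If you are unsure whether a proxy is visible to Python at all, the standard library can report what it detects. This is only a diagnostic sketch: it shows what urllib sees, and it is an assumption that the download library picks up the same settings. The name proxy_summary is hypothetical.

```python
import urllib.request


def proxy_summary():
    """Return the proxies Python's standard library detects, keyed by scheme."""
    return dict(urllib.request.getproxies())


proxies = proxy_summary()
print(proxies if proxies else "No proxy detected")
```

An empty result does not prove the download will fail, but it is a hint to check the proxy settings before starting a large download.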

In the current directory D:/spark, create a text file named down.txt, copy and paste the following code into it, and save:

from huggingface_hub import snapshot_download

snapshot_download("SparkAudio/Spark-TTS-0.5B", local_dir="pretrained_models/Spark-TTS-0.5B")

print('Download complete')

Then, in the activated virtual environment terminal, execute:

python down.txt

Make sure (venv) is visible at the command line:

Wait for the terminal to indicate the download is complete.

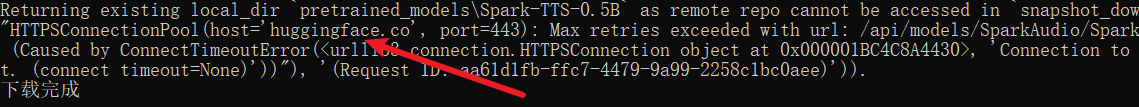

If you see an output like the following, it indicates a network connection error, possibly due to incorrect proxy configuration:

Returning existing local_dir `pretrained_models\Spark-TTS-0.5B` as remote repo cannot be accessed in `snapshot_download` ((MaxRetryError("HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /api/models/SparkAudio/Spark-TTS-0.5B/revision/main (Caused by ConnectTimeoutError(<urllib3.connection.HTTPSConnection object at 0x000001BC4C8A4430>, 'Connection to huggingface.co timed out. (connect timeout=None)'))"), '(Request ID: aa61d1fb-ffc7-4479-9a99-2258c1bc0aee)')).
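The message above says that the existing local folder is returned when the remote repository cannot be reached, so the script may still reach its final print statement even though nothing was downloaded. A quick sanity check is to look at what actually landed on disk. The sketch below is an assumption-laden helper (folder_stats is a made-up name) that only counts files and bytes; it does not know which files the model requires.

```python
from pathlib import Path


def folder_stats(model_dir):
    """Return (file_count, total_bytes) for every file under model_dir."""
    root = Path(model_dir)
    if not root.is_dir():
        return 0, 0
    files = [p for p in root.rglob("*") if p.is_file()]
    return len(files), sum(p.stat().st_size for p in files)


count, size = folder_stats("pretrained_models/Spark-TTS-0.5B")
print(f"{count} files, {size / 1e6:.1f} MB")
```

A missing or nearly empty folder suggests the download did not finish; fix the network connection and run python down.txt again.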

4. Launch the Web Interface

Once the model is downloaded, you can start and open the web interface.

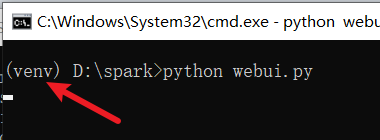

In the activated virtual environment terminal, execute:

python webui.py

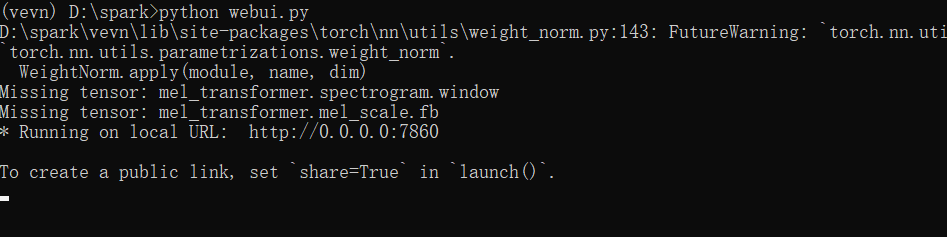

Wait until you see the following message, indicating startup is complete:

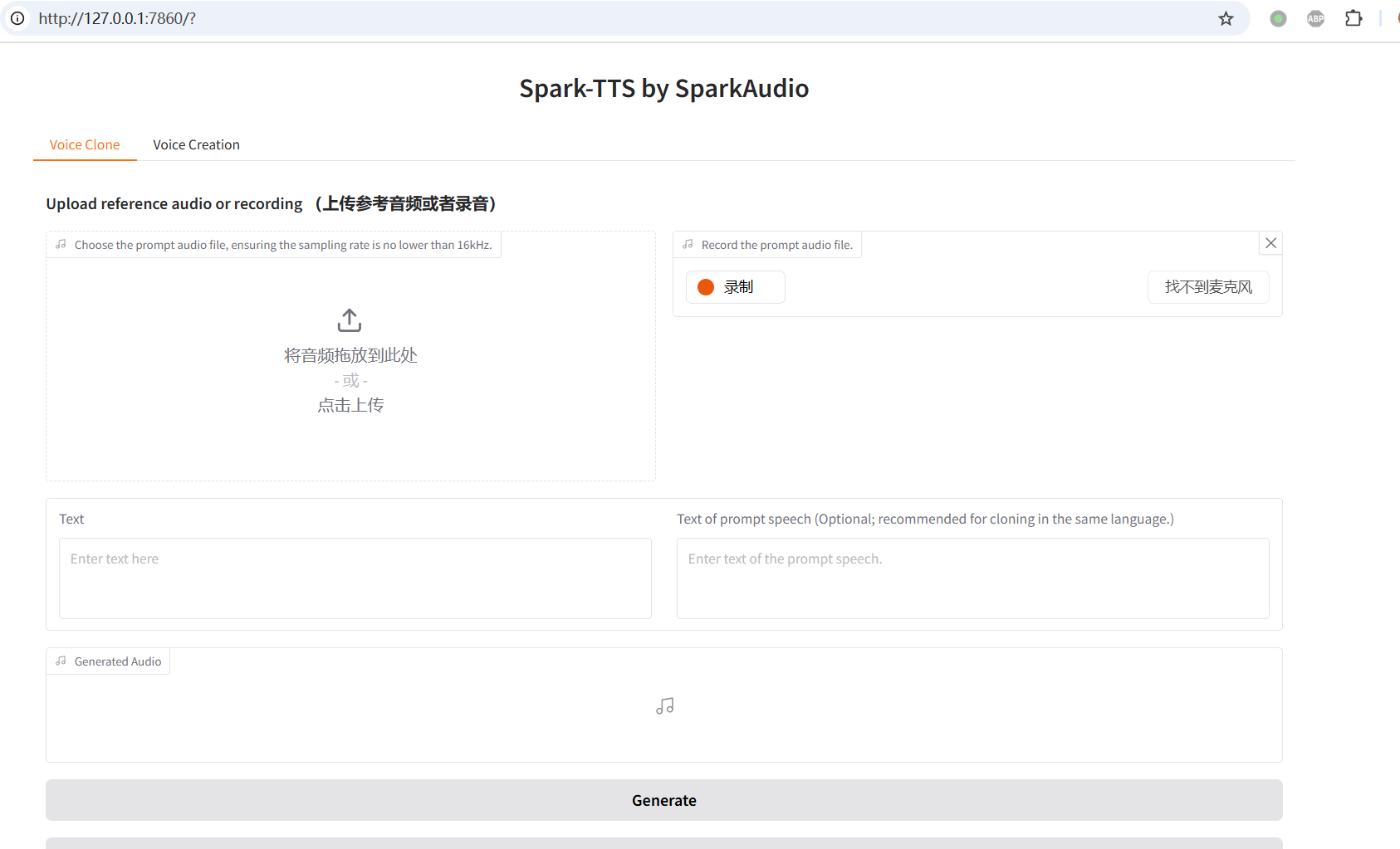
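If the browser cannot load the page, it helps to first check whether anything is listening on the web UI port. The following is a minimal sketch, assuming the default address 127.0.0.1:7860 used in this guide; port_is_open is a hypothetical helper name.

```python
import socket


def port_is_open(host="127.0.0.1", port=7860, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


print("web UI reachable:", port_is_open())
```

Run it from a second terminal while python webui.py is still running in the first one.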

Now, open your browser and go to http://127.0.0.1:7860. The web interface will look like this:

5. Test Voice Cloning

As shown below, select an audio file for cloning (3-10 seconds long, clear pronunciation, clean background).

Then, enter the corresponding text in the right Text of prompt speech field, input the desired text for generation on the left, and click the Generate button at the bottom to start.

After execution, the result will appear as shown.

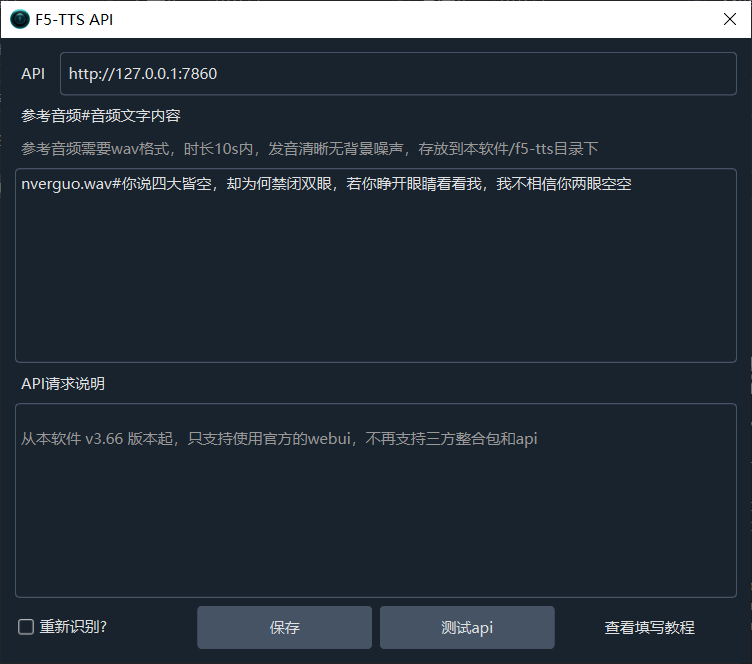
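The 3-10 second guideline for the reference clip can be checked before uploading. This sketch uses Python's built-in wave module, so it only works for uncompressed PCM WAV files; the function names are made up for this example.

```python
import wave


def wav_duration_seconds(path):
    """Return the duration of a PCM WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def is_good_length(seconds, low=3.0, high=10.0):
    """True when the clip length is within the 3-10 second range suggested above."""
    return low <= seconds <= high
```

For example, is_good_length(wav_duration_seconds("ref.wav")) returns True for a suitable clip, where ref.wav stands for your own reference file.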

6. Use in pyVideoTrans Software

Spark-TTS is very similar to F5-TTS. With a simple modification, it can be used directly in pyVideoTrans via the F5-TTS dubbing channel. If you're not comfortable modifying the code, you can download the pre-modified version from https://pvt9.com/spark-use-f5-webui.zip and overwrite the existing webui.py file with it.

- Open the

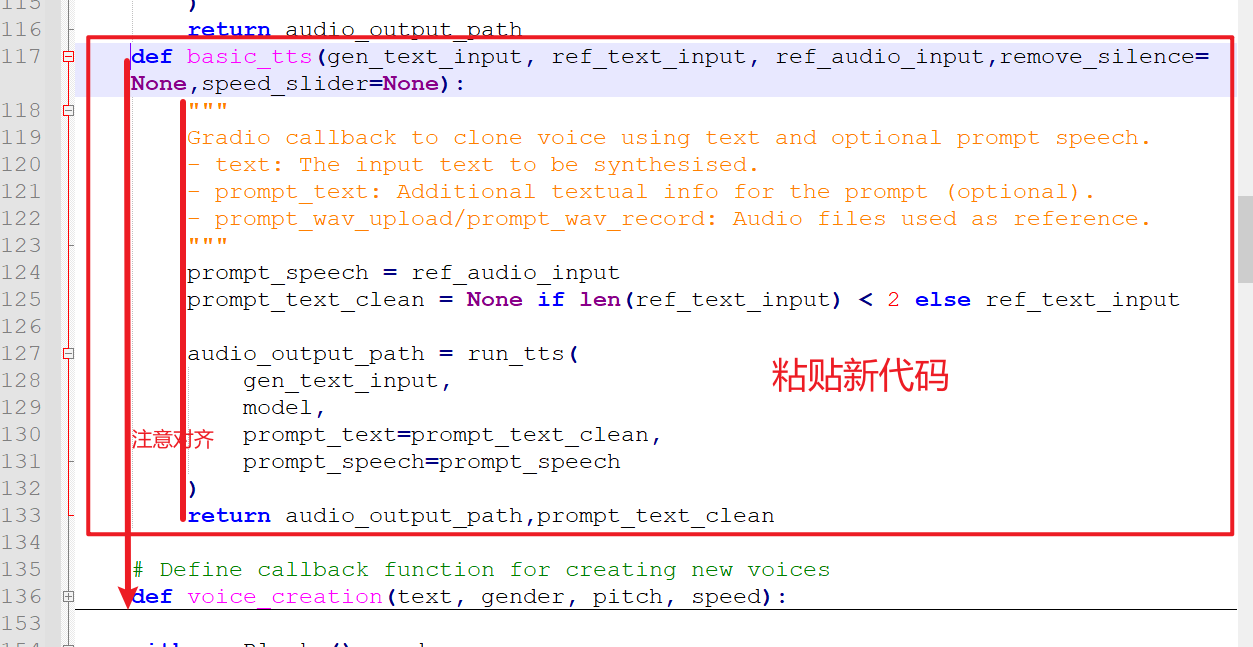

webui.pyfile and paste the following code above approximately line 135:

    def basic_tts(gen_text_input, ref_text_input, ref_audio_input, remove_silence=None, speed_slider=None):
        """
        Gradio callback to clone a voice from text and a reference audio clip.
        - gen_text_input: The input text to be synthesised.
        - ref_text_input: Transcript of the reference audio (optional).
        - ref_audio_input: Audio file used as reference.
        - remove_silence, speed_slider: Accepted but not used.
        """
        prompt_speech = ref_audio_input
        # Treat a reference text shorter than 2 characters as "not provided".
        prompt_text_clean = None if len(ref_text_input) < 2 else ref_text_input
        audio_output_path = run_tts(
            gen_text_input,
            model,
            prompt_text=prompt_text_clean,
            prompt_speech=prompt_speech
        )
        return audio_output_path, prompt_text_clean

Important: Python code uses spaces for indentation; misalignment will cause errors. To avoid issues, do not use Notepad; instead, use a professional code editor like Notepad++ or VSCode.

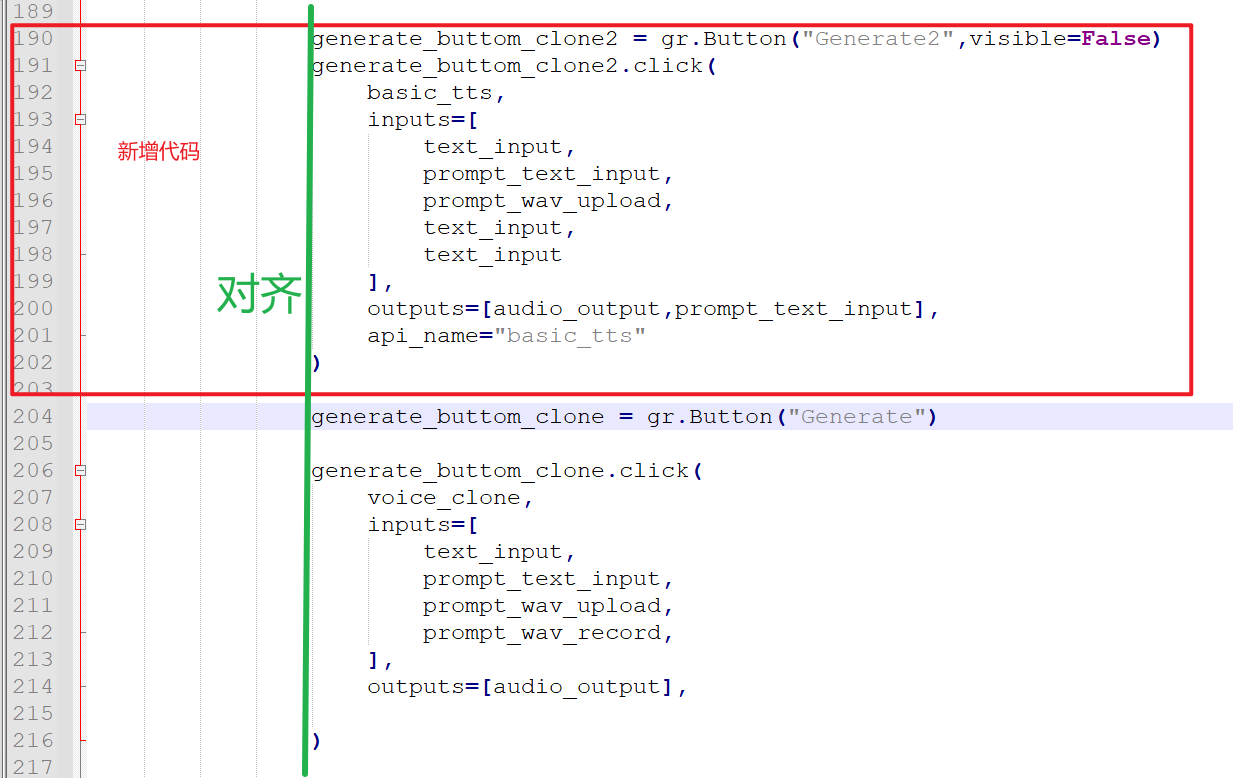

- Then, find the line generate_buttom_clone = gr.Button("Generate") around line 190. Paste the following code above it, adjusting the leading spaces so that it lines up with that line:

    generate_buttom_clone2 = gr.Button("Generate2", visible=False)
    generate_buttom_clone2.click(
        basic_tts,
        inputs=[
            text_input,
            prompt_text_input,
            prompt_wav_upload,
            text_input,  # placeholder for remove_silence (unused)
            text_input,  # placeholder for speed_slider (unused)
        ],
        outputs=[audio_output, prompt_text_input],
        api_name="basic_tts"
    )

- Save the file and restart webui.py:

python webui.py

- Enter the address http://127.0.0.1:7860 in pyVideoTrans under "Menu" -> "TTS Settings" -> "F5-TTS" as the API address to start using it. The reference audio location and input method are the same as for F5-TTS.
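Once the basic_tts endpoint is registered, it can in principle also be called from a script, which is handy for testing the modification without opening pyVideoTrans. The following is a rough sketch, assuming the gradio_client package is available in the virtual environment and that the modified webui.py from this section is running. The names call_basic_tts, demo, and ref.wav are hypothetical, and the shape of the returned value is an assumption based on the two outputs declared for the hidden button.

```python
def call_basic_tts(client, gen_text, ref_text, ref_audio):
    """Call the /basic_tts endpoint and return (audio_path, ref_text_used).

    `client` is any object with a gradio_client-style predict() method.
    """
    # Positional order follows basic_tts(gen_text_input, ref_text_input,
    # ref_audio_input, remove_silence, speed_slider). The last two are
    # ignored by the function, so empty strings are passed as fillers.
    result = client.predict(
        gen_text, ref_text, ref_audio, "", "", api_name="/basic_tts"
    )
    audio_path, ref_text_used = result
    return audio_path, ref_text_used


def demo():
    """Not called automatically; needs the web UI running and a real ref.wav."""
    from gradio_client import Client, handle_file

    client = Client("http://127.0.0.1:7860")
    audio_path, _ = call_basic_tts(
        client,
        "Hello from Spark-TTS.",
        "Words spoken in the reference clip.",
        handle_file("ref.wav"),
    )
    print("Generated audio:", audio_path)
```

Calling demo() from the activated environment should print the path of the generated audio file if the endpoint behaves as assumed.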