Three-Step Reflection Method for Translating SRT Subtitles

Andrew Ng's "Three-Step Reflection Translation Method" is highly effective. It improves translation quality by having the model self-review the translation results and propose enhancements. However, applying this method directly to SRT subtitle translation presents some challenges.

Special Requirements of SRT Subtitle Format

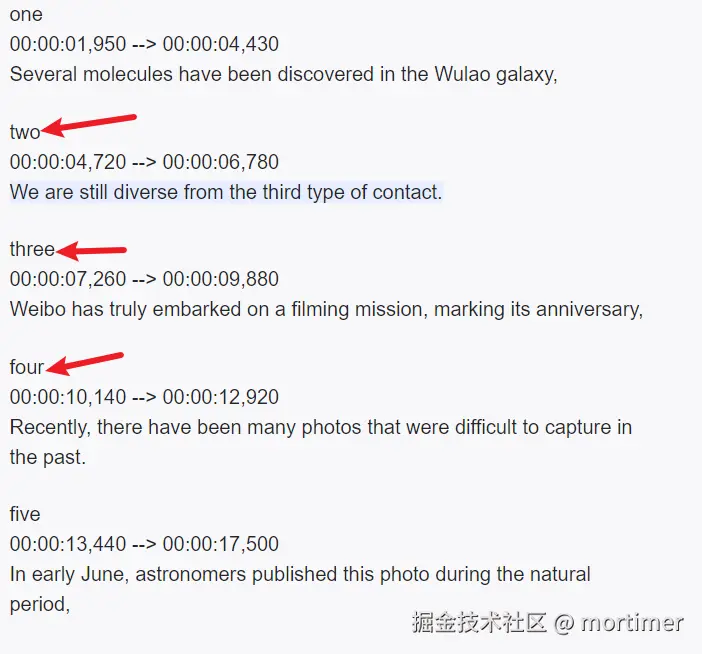

The SRT subtitle format has strict requirements:

- First line: Subtitle number (numeric)

- Second line: Two timestamps connected by `-->`, in the format hours:minutes:seconds,3-digit milliseconds

- Third line and beyond: Subtitle text content

Subtitles are separated from each other by a blank line (two consecutive line breaks).
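The format rules above can be checked mechanically. The following is a minimal sketch, not the tool's actual code: the names `Cue`, `TIMING_RE`, and `parse_srt` are hypothetical, and the parser assumes blocks are separated by blank lines and rejects anything that does not follow the number / timing / text layout.

```python
import re
from typing import List, NamedTuple

# hours:minutes:seconds,milliseconds --> hours:minutes:seconds,milliseconds
# re.ASCII keeps \d from matching full-width digits.
TIMING_RE = re.compile(
    r"^\d{2}:\d{2}:\d{2},\d{3} --> \d{2}:\d{2}:\d{2},\d{3}$", re.ASCII
)
NUMBER_RE = re.compile(r"^[0-9]+$")


class Cue(NamedTuple):
    number: int
    timing: str
    text: str


def parse_srt(content: str) -> List[Cue]:
    """Split SRT text into cues, raising ValueError on a malformed block."""
    normalized = content.replace("\r\n", "\n").strip()
    if not normalized:
        return []
    cues = []
    # Blocks are separated by one or more blank lines.
    for block in re.split(r"\n\s*\n", normalized):
        lines = [line.strip() for line in block.split("\n")]
        if len(lines) < 3:
            raise ValueError(f"incomplete block: {block!r}")
        if not NUMBER_RE.match(lines[0]):
            raise ValueError(f"bad subtitle number: {lines[0]!r}")
        if not TIMING_RE.match(lines[1]):
            raise ValueError(f"bad timing line: {lines[1]!r}")
        cues.append(Cue(int(lines[0]), lines[1], "\n".join(lines[2:])))
    return cues
```

A timing line that uses a full-width comma or colon fails the `TIMING_RE` check, which is one of the format errors discussed later in this article.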

Example:

1

00:00:01,950 --> 00:00:04,430

Several molecules have been discovered in the five-star system,

2

00:00:04,720 --> 00:00:06,780

We are still multiple universes away from third-type contact.

3

00:00:07,260 --> 00:00:09,880

Weibo has been carrying out filming missions for years now,

4

00:00:10,140 --> 00:00:12,920

Many previously difficult-to-capture photos have been transmitted recently.

Common Issues in SRT Translation

When using AI to translate SRT subtitles, the following problems may occur:

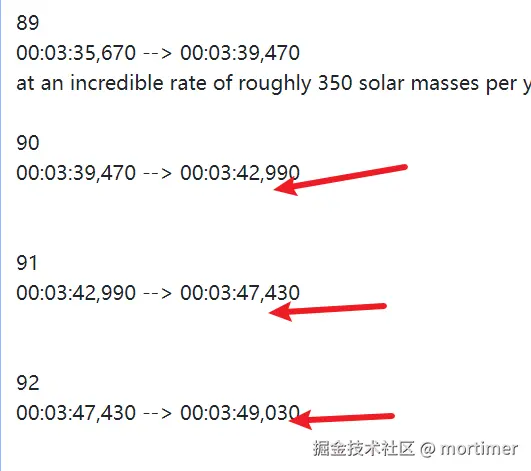

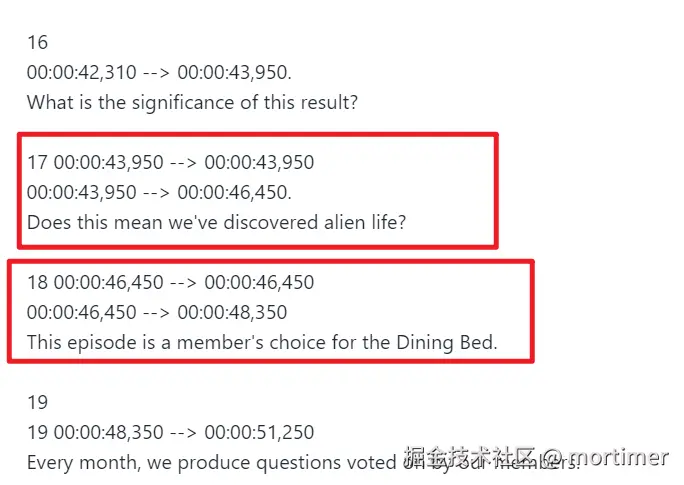

- Format Errors:

  - Missing subtitle numbers or duplicate timestamps

  - Translating English punctuation in timestamps to Chinese punctuation

  - Merging adjacent subtitle text into one line, especially when the preceding and following sentences form a complete sentence grammatically

- Translation Quality Issues:

  - Translation errors often occur even with strict prompt constraints.

Common Error Examples:

- Subtitle Text Merging Causing Blank Lines

- Format Confusion

- Subtitle Number Translated

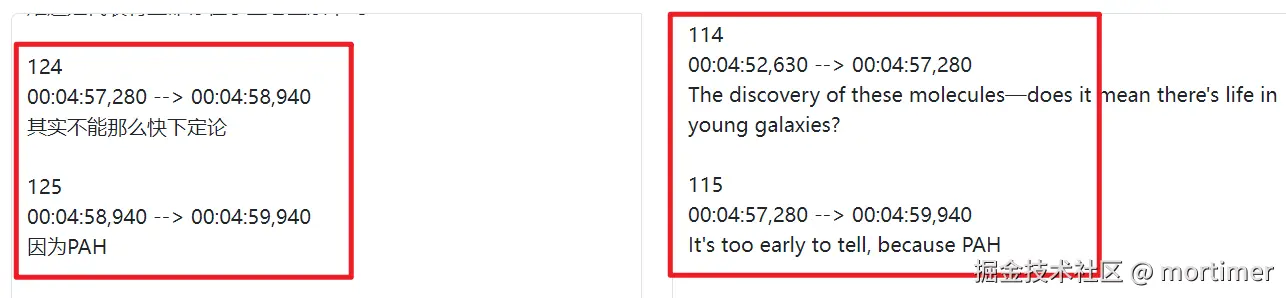

- Mismatch in Original and Result Subtitle Count

As mentioned above, when two consecutive subtitles are grammatically part of one sentence, they are likely to be translated as a single subtitle, resulting in fewer subtitles in the output.

Format errors directly break any downstream process that relies on the SRT file. Different models make different errors at different rates. In general, more capable models are more likely to return valid, compliant content, while small locally deployed models are almost unusable for this task.
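Because these errors break downstream steps, it can help to compare the model's output with the source before using it. The sketch below is one possible check, not part of the tool described here; `timing_lines` and `check_translation` are hypothetical names. It relies on the observation that timestamp lines should survive translation unchanged, so a missing, duplicated, or altered timing line points to one of the errors listed above.

```python
import re
from typing import List

# A well-formed timing line; re.ASCII rejects full-width digits.
TIMING_LINE_RE = re.compile(
    r"^\d{2}:\d{2}:\d{2},\d{3} --> \d{2}:\d{2}:\d{2},\d{3}[ \t]*\r?$",
    re.MULTILINE | re.ASCII,
)


def timing_lines(srt_text: str) -> List[str]:
    """Return every well-formed timing line in the text, in order."""
    return [line.strip() for line in TIMING_LINE_RE.findall(srt_text)]


def check_translation(source: str, result: str) -> List[str]:
    """Return a list of problems; an empty list means the checks passed."""
    problems = []
    src, out = timing_lines(source), timing_lines(result)
    if len(src) != len(out):
        problems.append(
            f"subtitle count mismatch: {len(src)} in source, {len(out)} in result"
        )
    if len(set(out)) != len(out):
        problems.append("duplicate timestamps in result")
    # Report only the first altered timing line to keep the output short.
    for i, (a, b) in enumerate(zip(src, out), start=1):
        if a != b:
            problems.append(f"timestamp changed at subtitle {i}: {a!r} -> {b!r}")
            break
    return problems
```

A timing line whose punctuation was converted to Chinese punctuation no longer matches the pattern, so it shows up as a count mismatch rather than passing silently.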

However, given the improvement in translation quality with the three-step reflection method, we still attempted it. We ultimately chose gemini-1.5-flash for a small trial, mainly because it is intelligent enough and free, aside from frequent rate limits.

Prompt Writing Strategy

Following Andrew Ng's three-step reflection workflow, write the prompts:

- Step 1: Require the AI to perform a literal translation.

- Step 2: Require the AI to evaluate the literal translation and provide optimization suggestions.

- Step 3: Require the AI to perform a free translation based on the optimization suggestions.

The one difference from the original workflow is that the prompts strongly emphasize that the returned content must be valid SRT, although the model does not always comply.
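The three steps and the format constraint could be combined into a single prompt along the following lines. This is only a sketch: the wording, the `<source_srt>` wrapper, and the names `PROMPT_TEMPLATE` and `build_prompt` are assumptions, and only the three `<step...>` tag names are taken from the result example shown in this article.

```python
# Hypothetical prompt wording; adjust it to the model and languages in use.
PROMPT_TEMPLATE = """You are a professional subtitle translator. Translate the SRT subtitles inside <source_srt> into {target_language}, working in three steps.

Step 1: Translate literally. Keep every subtitle number and timestamp line unchanged and translate only the text lines.
Step 2: Review the Step 1 translation and list concrete suggestions for improvement.
Step 3: Produce a refined translation that applies the suggestions.

The output of Step 1 and Step 3 must be valid SRT with the same number of subtitles as the source. Never merge, split, or drop subtitles.

Reply in exactly this layout:
<step1_initial_translation>
[literal translation as SRT]
</step1_initial_translation>
<step2_reflection>
[suggestions]
</step2_reflection>
<step3_refined_translation>
[refined translation as SRT]
</step3_refined_translation>

<source_srt>
{srt_text}
</source_srt>"""


def build_prompt(srt_text: str, target_language: str) -> str:
    """Fill the template with the subtitles to translate and the target language."""
    return PROMPT_TEMPLATE.format(
        target_language=target_language, srt_text=srt_text.strip()
    )
```

Asking for fixed tags around each step makes the final translation easy to separate from the intermediate output.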

Building a Simple API

One drawback of the three-step reflection mode is significantly higher token consumption: both the prompts and the outputs are longer. In addition, Gemini enforces rate limits and returns a 429 error when requests arrive too frequently, so the tool pauses after each request.

We used Flask to build the backend API and Bootstrap 5 for a simple frontend single-page application. The overall interface is as follows:

Obviously, using Gemini in China requires a VPN.

- Lines to Translate Simultaneously: The number of subtitle lines sent in one translation request. If the value is too large, the request may exceed token limits and fail; if it is too small, batching brings little benefit. The recommended range is 30-100, and the default is 50.

- Pause After Translation (seconds): Prevents requests from being sent too frequently and triggering 429 errors. With a value of 10, the tool waits 10 seconds after each request returns before sending the next one.
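The two settings above amount to a batch-and-pause loop, sketched here under stated assumptions. `batches` and `translate_all` are hypothetical names, and `translate_batch` stands in for whatever function actually sends one request to the model; it is passed in as a parameter so the sketch does not depend on any particular client library.

```python
import time
from typing import Callable, Iterator, List


def batches(cues: List[str], size: int = 50) -> Iterator[List[str]]:
    """Yield consecutive groups of at most `size` subtitle blocks."""
    for start in range(0, len(cues), size):
        yield cues[start:start + size]


def translate_all(
    cues: List[str],
    translate_batch: Callable[[str], str],
    batch_size: int = 50,
    pause_seconds: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Translate the cues batch by batch, pausing between requests."""
    results = []
    for i, batch in enumerate(batches(cues, batch_size)):
        if i > 0:
            # Wait after the previous request before starting the next one.
            sleep(pause_seconds)
        results.append(translate_batch("\n\n".join(batch)))
    return results
```

The `sleep` parameter exists only so the pause can be replaced in tests. Handling for a 429 response, such as waiting longer and retrying, would live inside `translate_batch`.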

Return Result Example:

<step1_initial_translation>

1

00:00:01,950 --> 00:00:04,430

Several molecules have been discovered in the five-star system,

2

00:00:04,720 --> 00:00:06,780

We are still multiple universes away from third-type contact.

3

00:00:07,260 --> 00:00:09,880

Weibo has been carrying out filming missions for years now,

4

00:00:10,140 --> 00:00:12,920

Many previously difficult-to-capture photos have been transmitted recently.

5

00:00:13,440 --> 00:00:17,500

In early June, astronomers published this photo in Nature,

6

00:00:18,040 --> 00:00:19,180

Outside the blue core,

7

00:00:19,360 --> 00:00:21,380

There's also this circle of orange light,

8

00:00:21,900 --> 00:00:23,740

This is a new drama-scale sweet donut,

9

00:00:24,380 --> 00:00:25,640

This is a portal.

10

00:00:26,280 --> 00:00:28,100

This is the generation ring of an alien civilization,

</step1_initial_translation>

<step2_reflection>

* **Line 1:** "Five-star system" is likely a mistranslation. It probably refers to a five-member committee or group, not a star system. Clarify the context.

* **Line 2:** "Multiple universes" seems like an over-exaggeration. Rephrase for clarity and accuracy.

* **Line 3:** "Weibo" should be explained as a Chinese social media platform. "Filming missions" is unclear. Does it mean "posting videos/images"?

* **Line 8:** "Drama-scale sweet donut" is a nonsensical literal translation. Figure out the intended meaning.

* **Line 9:** "Portal" seems out of context. Verify the intended meaning.

* **Line 10:** "Generation ring" is likely a mistranslation. Clarify the context.

* **Line 11:** "Organic polycyclic aromatic hydrocarbons" is overly technical for a general audience. Simplify if possible.

* **Line 12 and 14:** Use the correct formatting for the galaxy's name: SPT0418-47.

* **Line 15:** "It hasn't been shortened" is awkward. Remove or rephrase.

* **Line 28:** The name of the organization and the resource should be translated more naturally and accurately. Consider breaking this long line into two for better readability.

* **Line 29:** "Cute plush dolls" may sound childish. Consider rephrasing as "animated characters" or similar.

* **Line 35:** "James Webb Space Telescope" should be used consistently throughout. Shortening to "Webb Telescope" after the first mention is acceptable.

* **Line 44:** "SPD048" is likely a typo. It should be SPT0418-47 to be consistent.

* **Line 45-46:** "Standard beautiful photo" is redundant. Simplify to "beautiful photo".

* **Line 48:** "Grovitational Lenshin" is a typo. Correct to "Gravitational Lensing".

* **Line 50:** The sentence is incomplete. Finish the thought.

</step2_reflection>

<step3_refined_translation>

1

00:00:01,950 --> 00:00:04,430

Several molecules have been discovered in the five-member group's area of focus.

2

00:00:04,720 --> 00:00:06,780

We are still far from making contact with extraterrestrial life.

3

00:00:07,260 --> 00:00:09,880

The James Webb Space Telescope has been capturing images for a year now,

4

00:00:10,140 --> 00:00:12,920

and has recently transmitted many previously unseen photos.

5

00:00:13,440 --> 00:00:17,500

In early June, astronomers published this image in Nature.

6

00:00:18,040 --> 00:00:19,180

Outside the blue core,

7

00:00:19,360 --> 00:00:21,380

there's a ring of orange light.

8

00:00:21,900 --> 00:00:23,740

This is a large, ring-shaped structure.

9

00:00:24,380 --> 00:00:25,640

This is being investigated.

10

00:00:26,280 --> 00:00:28,100

This is thought to be a sign of an early galaxy.

</step3_refined_translation>

Extract the text within the <step3_refined_translation></step3_refined_translation> tags from the result, which is the final translation.
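The extraction step can be sketched with a regular expression. The function name `extract_final_translation` is hypothetical; the tag name is the one shown in the result above.

```python
import re

# DOTALL lets "." span the line breaks inside the SRT block.
STEP3_RE = re.compile(
    r"<step3_refined_translation>(.*?)</step3_refined_translation>",
    re.DOTALL,
)


def extract_final_translation(response_text: str) -> str:
    """Return the text between the step 3 tags, without surrounding whitespace."""
    match = STEP3_RE.search(response_text)
    if match is None:
        raise ValueError("no <step3_refined_translation> block in the response")
    return match.group(1).strip()
```

Raising an error when the tags are missing keeps a malformed response from being written out as if it were a finished subtitle file.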

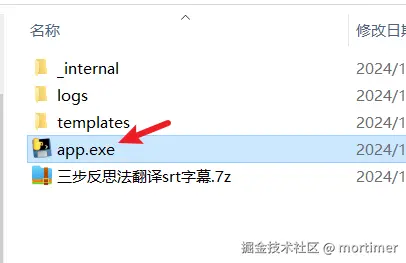

Simple Package for Local Trial

Download the package, extract it, and double-click app.exe to open the UI shown above in your browser. Enter your Gemini API Key and proxy address, select the SRT subtitle file to translate, and choose the target language to test the results.

Q1: How is the Reflection Workflow Different from Traditional Machine Translation?

A1: The reflection workflow introduces self-evaluation and optimization mechanisms, simulating the thought process of human translators, resulting in more accurate and natural translations.

Q2: How Long Does the Reflection Workflow Take?

A2: Although the reflection workflow requires multiple AI processing steps, it typically only takes 10–20 seconds longer than traditional methods. Considering the improvement in translation quality, this time investment is worthwhile.

Q3: Does the Reflection Workflow Guarantee Valid SRT Output?

A3: No, issues like blank lines or mismatched subtitle counts can still occur. For example, if a subsequent subtitle has only 3-5 words and is grammatically continuous with the previous one, the translation might merge them into a single subtitle.

The tool now also supports uploading video or audio files. Because Gemini's models accept audio and video input as well as text, a single request can transcribe the audio/video to subtitles and translate them, returning the translated result.

For example, sending an English-spoken video to Gemini and specifying translation to Chinese will return Chinese subtitles.

1. Translate Subtitles Only

Paste SRT subtitle content in the left text box or click the "Upload SRT Subtitle" button to select a subtitle file from your local computer.

Then set the target language and use "Three-Step Reflection Translation" to have Gemini perform the translation. The result appears in the right text box. Click the "Download" button in the lower right corner to save it locally as an SRT file.

2. Transcribe Audio/Video to Subtitles

Click the "Upload Audio/Video for Transcription to Subtitles" button on the left and select an audio or video file to upload. Once the upload finishes, submit the request. Gemini processes the file and returns the subtitles recognized from the speech, with good results.

If a target language is specified, Gemini will translate the recognized results into that language before returning, completing both subtitle generation and translation in one step.

Download Link:

https://github.com/jianchang512/ai2srt/releases/download/v0.2/windows-ai2srt-0.2.7z