Video translation software typically offers several speech recognition channels for transcribing speech in audio or video into subtitle files. These tools perform reasonably well on Chinese and English, but accuracy drops for less widely supported languages such as Japanese, Korean, and Indonesian.

This is because large models developed abroad are trained primarily on English data, so even their Chinese performance is suboptimal. Domestic (Chinese) models, in turn, focus on Chinese and English, with Chinese data dominating.

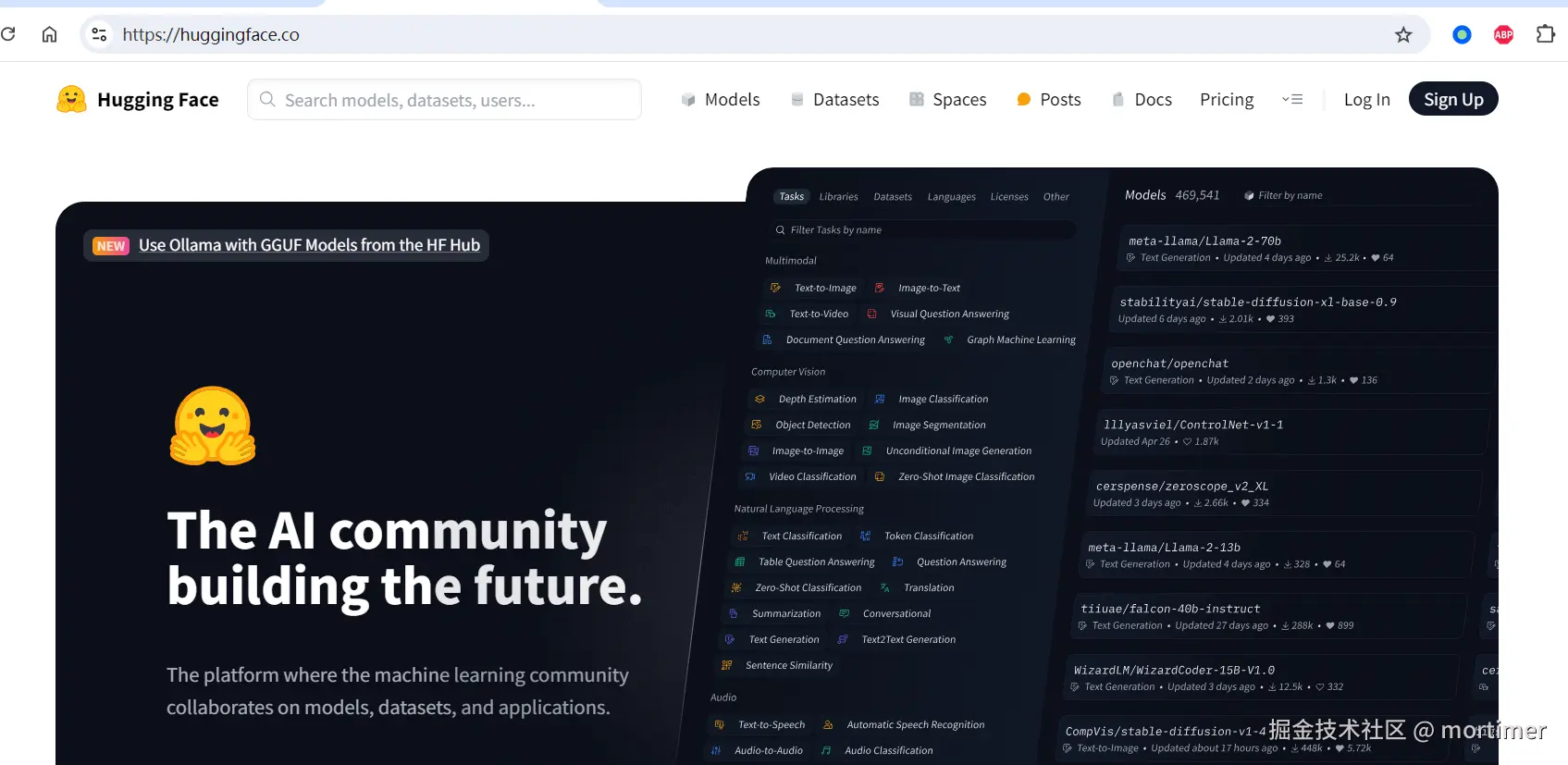

This lack of training data leads to poor recognition quality. Fortunately, Hugging Face (https://huggingface.co) hosts a large collection of fine-tuned models, including many tuned for specific less common languages, which often recognize those languages considerably better.

This article shows how to use Hugging Face models in the video translation software to recognize such languages, using Japanese as an example.

1. Access via VPN

Because of network restrictions, https://huggingface.co cannot be reached directly from some regions. If that applies to you, set up a VPN or proxy so that your computer can access the site.

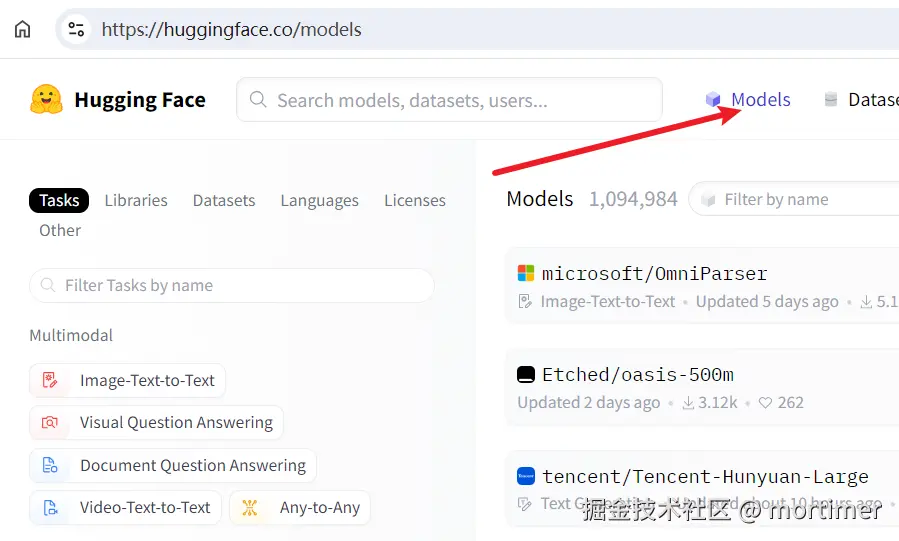

Once accessed, you will see the Hugging Face homepage.

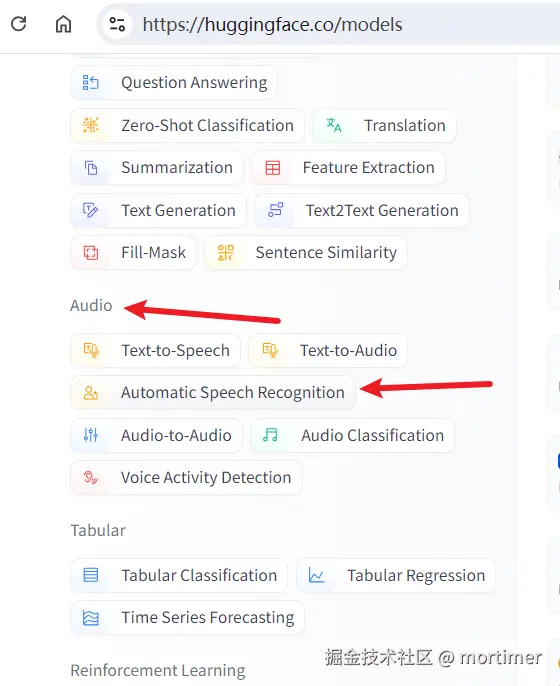

2. Navigate to the Models Section

Click on the "Automatic Speech Recognition" category in the left navigation bar to display all speech recognition models on the right.

3. Find Models Compatible with faster-whisper

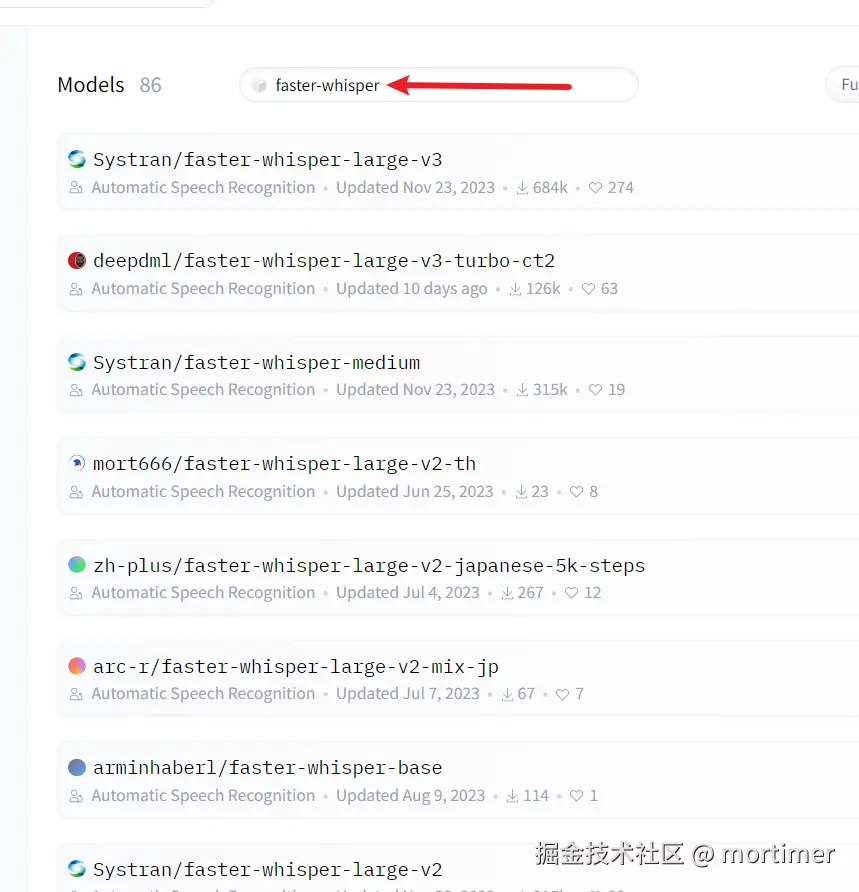

At the time of writing, Hugging Face hosts 20,384 speech recognition models, but not all of them work with the video translation software. Models are published in different formats, and the software supports only faster-whisper models.

- Enter "faster-whisper" in the search box.

Most models in the search results can be used in video translation software.

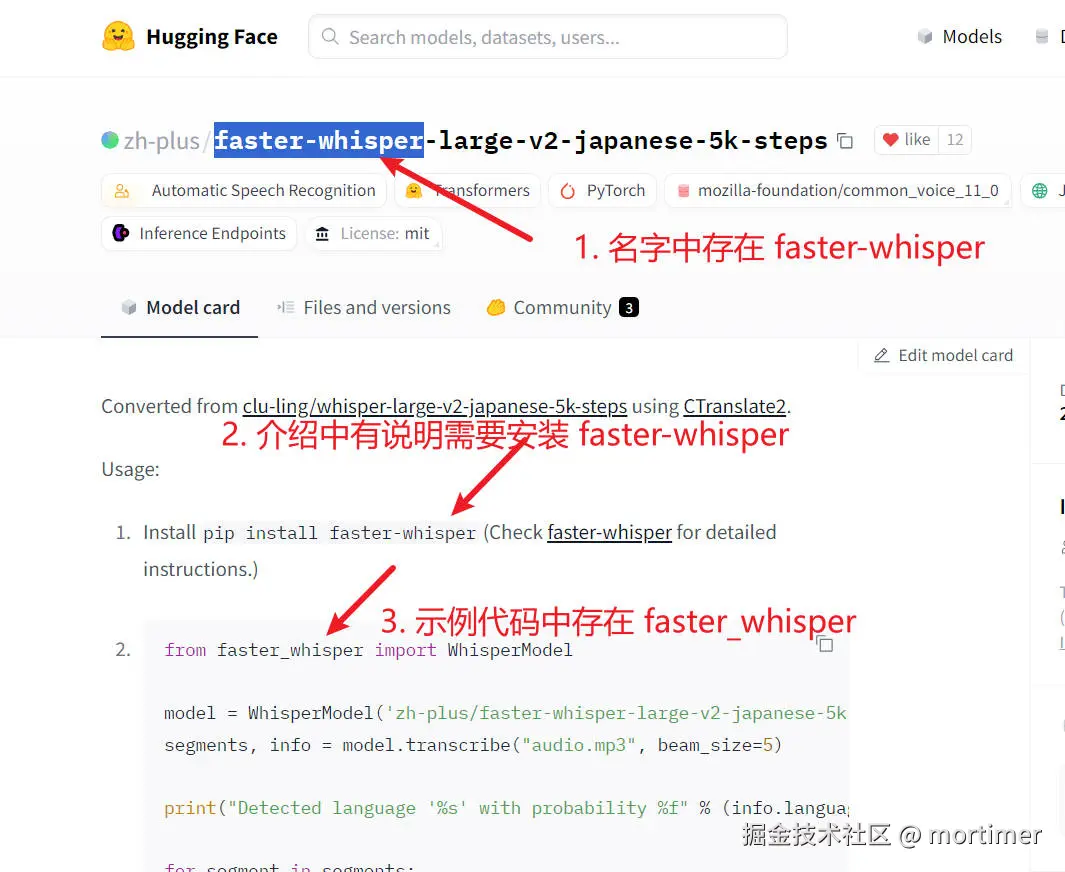

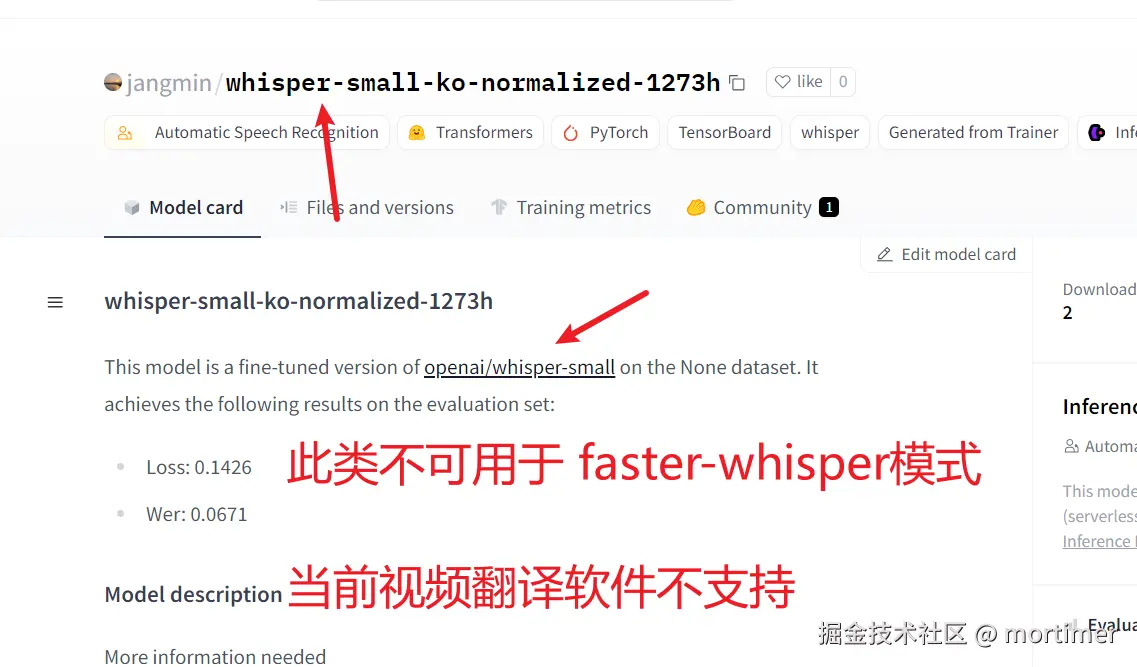
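If you prefer to jump straight to the filtered list, the two steps above (choosing the "Automatic Speech Recognition" category and searching for "faster-whisper") can be combined into a single link. The sketch below assumes the model listing page accepts the `pipeline_tag` and `search` query parameters, which is how it encodes these filters at the time of writing; the names `HF_MODELS_URL` and `build_search_url` are hypothetical, and the site may change its parameters.

```python
from urllib.parse import urlencode

# Base URL of the Hugging Face model listing page.
HF_MODELS_URL = "https://huggingface.co/models"


def build_search_url(query: str, task: str = "automatic-speech-recognition") -> str:
    """Return a model-listing URL filtered by task and search text.

    Assumes the page understands the "pipeline_tag" and "search" query
    parameters (observed behavior, not a documented guarantee).
    """
    params = {"pipeline_tag": task, "search": query}
    return f"{HF_MODELS_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_search_url("faster-whisper"))
    print(build_search_url("japanese"))
```

Open the printed link in a browser; swapping the query for a language name such as "japanese" gives the language search described next.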

However, some models compatible with faster-whisper may not include "faster-whisper" in their names. How can you find these?

- Search by language name, such as "japanese," then check the model details page to see if it specifies compatibility with faster-whisper.

If neither the model name nor its description explicitly mentions faster-whisper, assume the model is not usable. Names that merely contain "whisper" or "whisper-large" do not qualify, because "whisper" on its own refers to the openai-whisper format, which the video translation software does not currently support. Whether it will be supported in the future is still undecided.
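The rule above is simple enough to write down as a filter, which can help when scanning a long list of search results. This is a minimal sketch of the article's rule of thumb only: it checks text, not the actual model files, so a match is a hint to open the model page rather than a guarantee. The function name is hypothetical, and accepting the underscore spelling `faster_whisper` (the Python package name) is an added assumption.

```python
def is_faster_whisper_candidate(model_id: str, description: str = "") -> bool:
    """Apply the rule of thumb: name or description must mention faster-whisper.

    A plain "whisper" or "whisper-large" mention is deliberately not enough.
    """
    text = f"{model_id} {description}".lower()
    # Assumption: some model cards spell it like the package, "faster_whisper".
    return "faster-whisper" in text or "faster_whisper" in text


if __name__ == "__main__":
    for model_id in ["Systran/faster-whisper-large-v3", "openai/whisper-large-v3"]:
        print(model_id, is_faster_whisper_candidate(model_id))
```

In this example `Systran/faster-whisper-large-v3` passes, while `openai/whisper-large-v3` is rejected because it only mentions "whisper".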

4. Copy the Model ID to the Video Translation Software

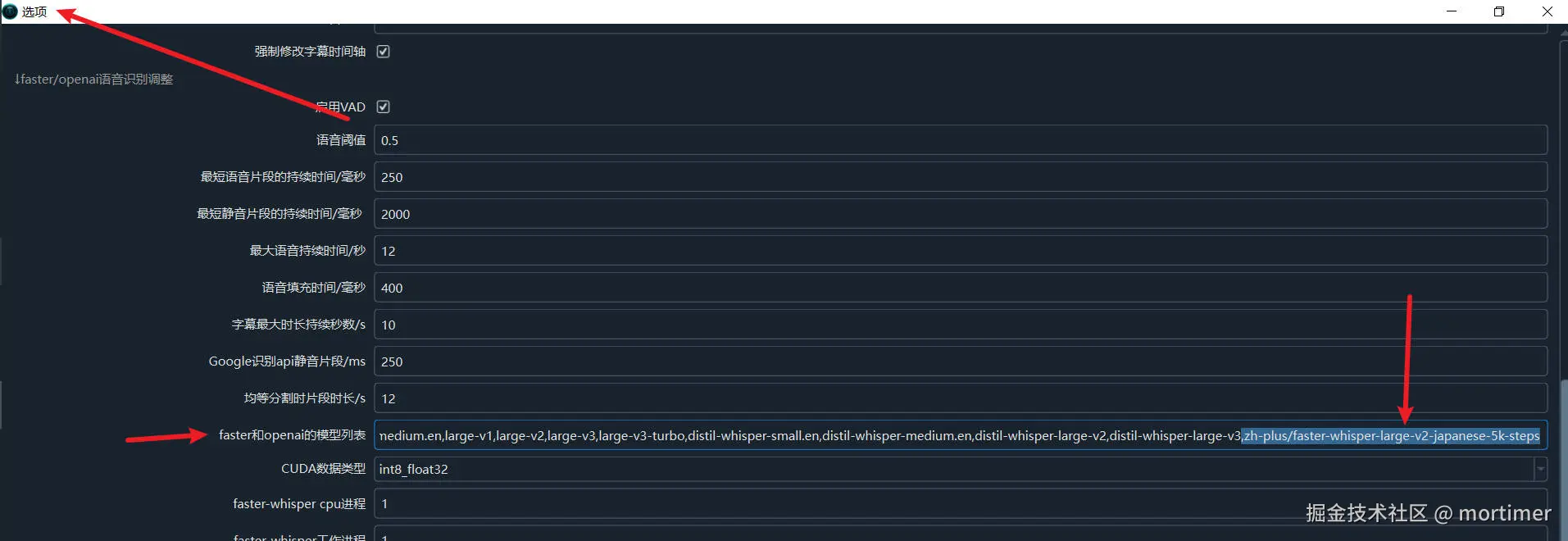

Once you find a suitable model, copy its Model ID and paste it into the video translation software under "Menu" -> "Tools" -> "Advanced Options" -> "faster and openai model list."

Copy the Model ID.

Paste it into the video translation software.

Save the settings.

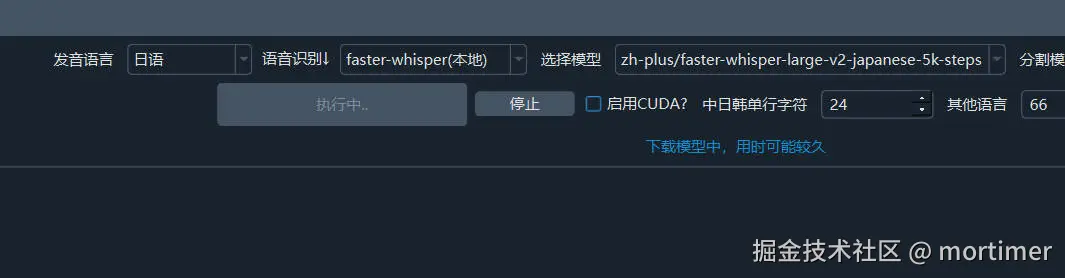
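A Hugging Face Model ID has the form `namespace/model-name`, for example `Systran/faster-whisper-large-v3`, and is shown at the top of the model page. A common mistake is pasting the full page URL instead of the ID. The hypothetical helper below accepts either form and returns a clean ID; its pattern is a simplified approximation of Hugging Face's naming rules, not the official validation, and it says nothing about how the software itself stores the list.

```python
import re

# Simplified approximation of a repo ID: "namespace/name", each part starting
# with a letter or digit, followed by letters, digits, ".", "_" or "-".
MODEL_ID_RE = re.compile(r"[A-Za-z0-9][A-Za-z0-9._-]*/[A-Za-z0-9][A-Za-z0-9._-]*")
HF_PREFIX = "https://huggingface.co/"


def extract_model_id(text: str) -> str:
    """Return a Model ID from a bare ID or a model page URL.

    Raises ValueError if the result does not look like "namespace/name".
    """
    candidate = text.strip()
    if candidate.startswith(HF_PREFIX):
        # Drop any query string or fragment, then keep only the first two
        # path segments (e.g. remove a trailing "/tree/main").
        path = candidate[len(HF_PREFIX):].split("?")[0].split("#")[0]
        candidate = "/".join(path.split("/")[:2])
    if not MODEL_ID_RE.fullmatch(candidate):
        raise ValueError(f"not a Hugging Face model ID: {text!r}")
    return candidate


if __name__ == "__main__":
    print(extract_model_id("https://huggingface.co/Systran/faster-whisper-large-v3/tree/main"))
```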

5. Select the faster-whisper Mode

Set the speech recognition channel to faster-whisper, then select the model you just added. If it does not appear in the list, restart the software.

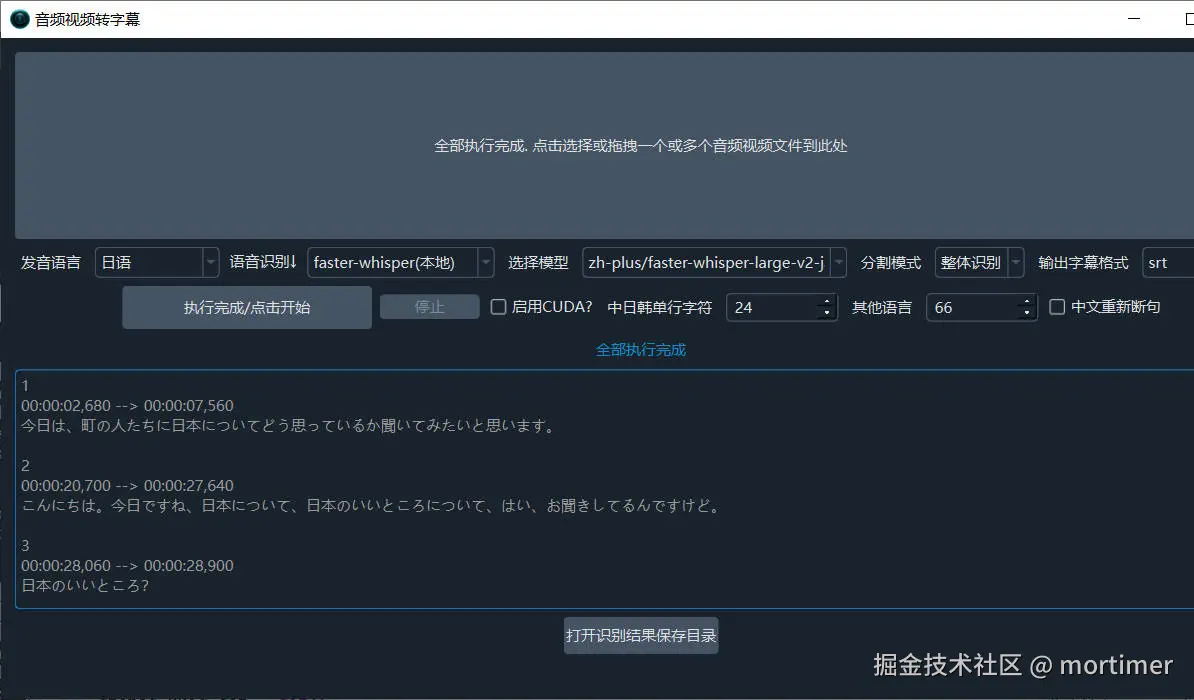

After selecting the model and speech language, you can start recognition.

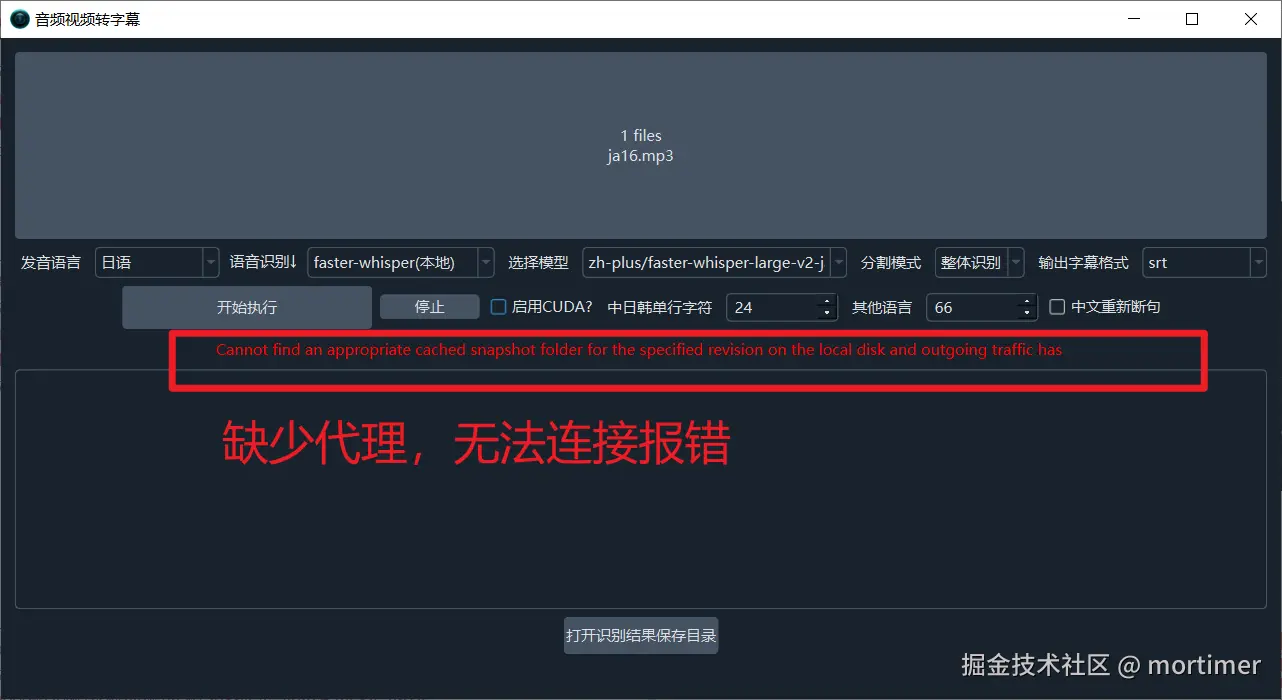

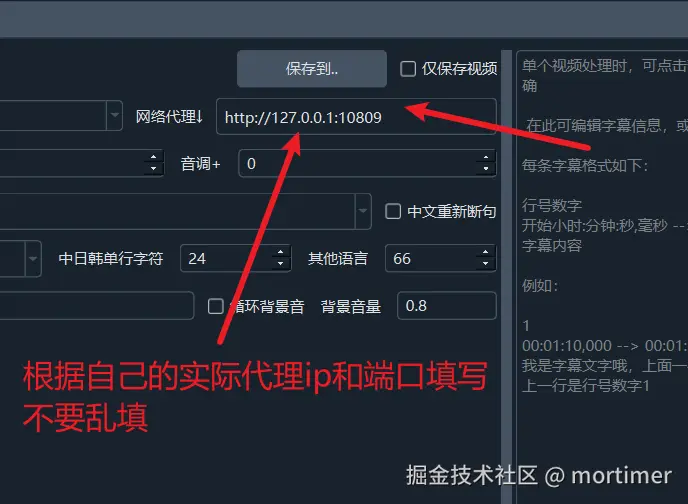

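Note: the model is downloaded from Hugging Face the first time you use it, so if the site is blocked in your region you must set up a proxy; otherwise, connection errors will occur. Try setting a global or system proxy on your computer first. If errors persist, enter the proxy IP and port in the "Network Proxy" text box on the main interface.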

For an explanation of network proxies, visit https://pyvideotrans.com/proxy
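If you run faster-whisper or other Hugging Face download tools yourself, outside the video translation software, one common way to route downloads through a proxy is the standard `HTTP_PROXY`/`HTTPS_PROXY` environment variables, which common Python HTTP clients such as `requests` honor by default. The helper below is a hypothetical sketch that builds those variables from the same IP and port you would type into the "Network Proxy" box; `127.0.0.1:7890` is only a placeholder, and whether the software itself reads these variables is not confirmed here.

```python
import os


def proxy_env(host: str, port: int, scheme: str = "http") -> dict[str, str]:
    """Build proxy environment variables from a proxy host and port."""
    if not 0 < port < 65536:
        raise ValueError(f"invalid port: {port}")
    url = f"{scheme}://{host}:{port}"
    # Set both spellings: some tools read upper-case names, others lower-case.
    return {
        "HTTP_PROXY": url,
        "HTTPS_PROXY": url,
        "http_proxy": url,
        "https_proxy": url,
    }


if __name__ == "__main__":
    # Placeholder address of a local proxy client; replace it with your own.
    os.environ.update(proxy_env("127.0.0.1", 7890))
    print(os.environ["HTTPS_PROXY"])  # prints http://127.0.0.1:7890
```

The variables only affect downloads started after they are set, in the same process or in programs launched from it.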

Depending on your network speed, the download may take a while. As long as no red error message appears, wait for it to finish.
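Once the download finishes and recognition runs, the software writes the recognized text to a subtitle file. faster-whisper returns each segment with `start` and `end` times in seconds plus the recognized `text`; the sketch below shows one way such segments can be turned into SRT subtitles. It uses a stand-in `Segment` dataclass so it runs without downloading a model, and it illustrates the SRT format rather than reproducing the video translation software's own code.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    # Stand-in for a faster-whisper segment: times in seconds, recognized text.
    start: float
    end: float
    text: str


def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments) -> str:
    """Build SRT text: index, time range and text per cue, cues separated by a blank line."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        cues.append(
            f"{index}\n{srt_timestamp(seg.start)} --> {srt_timestamp(seg.end)}\n{seg.text.strip()}\n"
        )
    return "\n".join(cues)


if __name__ == "__main__":
    demo = [Segment(0.0, 2.5, " Konnichiwa"), Segment(2.5, 61.04, " Yoroshiku onegaishimasu")]
    print(to_srt(demo))
```

With faster-whisper installed, real segments come from a call such as `segments, info = WhisperModel("Systran/faster-whisper-large-v3").transcribe("audio.wav", language="ja")`, and `to_srt(segments)` would then produce the subtitle text. This is for orientation only; the video translation software performs this step for you.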