Why Audio, Subtitles, and Video Become Desynchronized

When speech is translated into another language, sentence length and spoken duration usually change. For instance, a sentence translated from Chinese to English will almost always differ in length, and therefore in the time needed to say it.

ZH: 有多远滚多远 EN: Get out of here as far as you can!

ZH: 滚远点 JP: ここから出て行け。

If the original Chinese audio takes 2 seconds to pronounce, but the translated English voiceover takes 4 seconds, this inevitably leads to desynchronization.

How to Synchronize Them - Prioritizing Synchronization Over Quality

As mentioned above, suppose the segment lasts 2 seconds before translation and 4 seconds after. If you only need the two to line up and do not care about speaking speed or video playback speed, you can simply play the audio at 2 times its normal speed. The 4-second voiceover is then shortened to 2 seconds and matches the video. Alternatively, you can slow down the video, stretching the original 2-second segment to 4 seconds, which also achieves alignment.
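The arithmetic behind both options is the same ratio. The following is a minimal sketch of that calculation; the function name `sync_factor` is made up for illustration and is not part of the software.

```python
def sync_factor(original_s: float, dubbed_s: float) -> float:
    """Ratio of dubbed duration to original duration.

    Used as an audio speed-up rate, it shrinks the dub to the original length.
    Used as a video stretch factor, it lengthens the video to the dub length.
    """
    if original_s <= 0 or dubbed_s <= 0:
        raise ValueError("durations must be positive")
    return dubbed_s / original_s


original_s, dubbed_s = 2.0, 4.0          # the example from the text
factor = sync_factor(original_s, dubbed_s)

audio_after_speedup = dubbed_s / factor      # dub played `factor` times faster
video_after_slowdown = original_s * factor   # video stretched by `factor`

print(factor, audio_after_speedup, video_after_slowdown)
```

With the 2-second and 4-second durations above, the factor is 2: the sped-up audio lasts 2 seconds, or the slowed-down video lasts 4 seconds.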

Specific Steps for Audio Speed-up Synchronization:

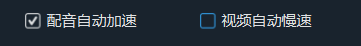

In the software interface, select "Auto Audio Speed-up" and deselect "Auto Video Slow-down."

Go to Menu > Tools > Options, and set the maximum audio speed-up factor to 100.

This will achieve synchronization, but the drawback is obvious: inconsistent speaking speed.

Specific Steps for Video Slow-down Synchronization:

Deselect "Auto Audio Speed-up" in the software interface and select "Auto Video Slow-down."

Go to Menu > Tools > Options, and set the maximum video slow-down factor to 20.

This also achieves alignment: the speaking speed stays constant while the video slows down. However, the pacing of the video becomes inconsistent in the same way.

If you merely want simple alignment and are not concerned about the overall effect, you can use either of these two methods.
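For readers who want to try these two operations outside the software, they can be expressed as FFmpeg filters: `atempo` changes audio tempo and `setpts` rescales video timestamps. The sketch below only builds the filter strings. It assumes FFmpeg as the tool, which the text above does not state, and the helper names are hypothetical. The `atempo` values are chained so that each stage stays at or below 2.0, a range that older FFmpeg builds also accept.

```python
def atempo_chain(factor: float) -> str:
    """Build an FFmpeg audio filter string that speeds audio up by `factor`."""
    if factor < 1.0:
        raise ValueError("this sketch only handles speed-up (factor >= 1)")
    parts = []
    remaining = factor
    # Split large factors into several stages of at most 2.0 each.
    while remaining > 2.0:
        parts.append("atempo=2.0000")
        remaining /= 2.0
    parts.append(f"atempo={remaining:.4f}")
    return ",".join(parts)


def setpts_slowdown(factor: float) -> str:
    """Build an FFmpeg video filter string that stretches video by `factor`."""
    if factor < 1.0:
        raise ValueError("this sketch only handles slow-down (factor >= 1)")
    return f"setpts={factor:.4f}*PTS"


print(atempo_chain(2.0))
print(setpts_slowdown(2.0))
```

The resulting strings would be passed to `-filter:a` and `-filter:v` respectively.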

Better, More Acceptable Synchronization Methods

Clearly, the synchronization methods described above are not practical. Audio that is too fast or video that is too slow is generally unacceptable and provides a poor user experience. For better results, you can enable both "Auto Audio Speed-up" and "Auto Video Slow-down" simultaneously.

Specific Steps:

When using Faster mode or OpenAI mode, try to use the medium model or a larger one, and choose "Overall Recognition."

In the software interface, select both "Auto Audio Speed-up" and "Auto Video Slow-down," and set a relatively small overall acceleration value, such as 10%.

Go to Menu > Tools > Options, and set the maximum audio speed-up factor to 1.8 (i.e., the maximum speaking speed can be accelerated to 1.8 times the normal speed). You can manually change this to values greater than 1, such as 2 or 1.5.

Go to Menu > Tools > Options, and set the maximum video slow-down factor to 2; this means the video can be slowed to at most 0.5 times its normal speed. You can manually adjust this to other values greater than 1, such as 3 or 5.

Even after performing the steps above, misalignment may still occur because maximum values are set. If a segment is still not aligned once these maximums are reached, the system gives up and simply extends the duration. In such cases, you can continue to adjust the video and subtitle-related options in Menu > Tools > Options.
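To see why capped values can leave a remainder, here is a minimal sketch of one possible way to combine the two limits. It is an assumption for illustration, not the software's actual algorithm: it speeds up the audio first, then slows the video, and reports whatever still does not fit. The names `SyncPlan` and `plan_sync` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SyncPlan:
    audio_rate: float     # playback rate applied to the dubbed audio (>= 1)
    video_stretch: float  # factor by which the video segment is stretched (>= 1)
    leftover_s: float     # seconds of audio still overhanging the video


def plan_sync(original_s: float, dubbed_s: float,
              max_audio_speedup: float = 1.8,
              max_video_slowdown: float = 2.0) -> SyncPlan:
    """Assumed strategy: speed up audio to its cap, then slow video to its cap."""
    if original_s <= 0 or dubbed_s <= 0:
        raise ValueError("durations must be positive")
    if dubbed_s <= original_s:
        return SyncPlan(1.0, 1.0, 0.0)  # the dub already fits

    # Step 1: speed up the audio, but never beyond the configured maximum.
    audio_rate = min(dubbed_s / original_s, max_audio_speedup)
    audio_len = dubbed_s / audio_rate

    # Step 2: stretch the video to cover what remains, again up to a maximum.
    video_stretch = min(audio_len / original_s, max_video_slowdown)
    video_len = original_s * video_stretch

    # Step 3: anything left over cannot be aligned within the caps.
    leftover_s = max(0.0, audio_len - video_len)
    return SyncPlan(audio_rate, video_stretch, leftover_s)


print(plan_sync(2.0, 4.0))   # fits within both caps
print(plan_sync(2.0, 10.0))  # exceeds both caps, leaving a remainder
```

In the second call both caps are hit and about 1.56 seconds of audio still overhang the video, which is the situation where the duration has to be extended.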

Is There a Perfect Synchronization Method?

Aside from manual intervention, such as condensing translations or adding transition frames, no perfectly automated method has yet been found.

An automated program would have to keep the audio speed-up within an acceptable range, keep the video slow-down within an acceptable range, and preserve lip-sync (mouth movements matching the onset of speech), all at once, for both very long and very short videos and for any language pair. At present this appears to be impossible. Apart from manual adjustments, there is no perfect method.