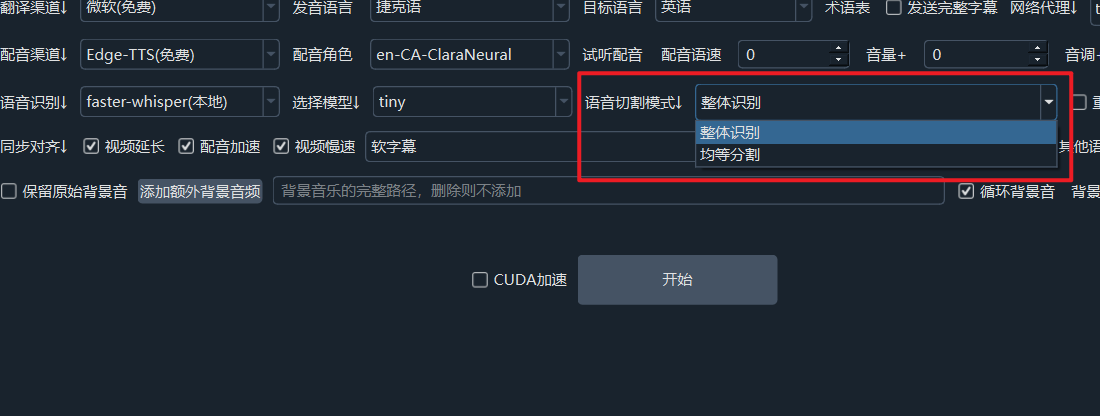

Difference between whole-file recognition and equal segmentation

Whole-file recognition:

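This mode gives the best recognition results, but it also uses the most computing resources. If the video is large and the large-v3 model is selected, the program may crash.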

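In this mode, the entire audio file is passed to the model, which uses VAD (voice activity detection) internally to segment the speech and split it into sentences. By default, a silence of 200 ms splits segments and the maximum sentence length is 3 s. Both values can be configured under Menu -- Tools/Options -- Advanced Options -- VAD.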
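To make the two VAD settings more concrete, here is a minimal sketch of how a "minimum silence" and a "maximum sentence length" interact when grouping speech into segments. It is not the model's actual VAD. It assumes the audio has already been reduced to one speech/non-speech flag per fixed-length frame; the function name `split_by_silence` and its parameters (`frame_ms`, `min_silence_ms`, `max_segment_ms`) are hypothetical, chosen only to mirror the 200 ms and 3 s defaults above.

```python
from typing import List, Optional, Tuple


def split_by_silence(
    is_speech: List[bool],
    frame_ms: int = 10,         # hypothetical frame length in milliseconds
    min_silence_ms: int = 200,  # a silence at least this long ends a segment
    max_segment_ms: int = 3000, # a segment is force-cut at this length
) -> List[Tuple[int, int]]:
    """Group per-frame speech flags into (start_ms, end_ms) segments."""
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None        # frame index where the current segment began
    last_speech: Optional[int] = None  # last speech frame in the current segment

    for i, speech in enumerate(is_speech):
        if speech:
            if start is None:
                start = i
            last_speech = i
            # Force a cut once the segment reaches the maximum length.
            if (i + 1 - start) * frame_ms >= max_segment_ms:
                segments.append((start * frame_ms, (i + 1) * frame_ms))
                start = None
                last_speech = None
        elif start is not None and last_speech is not None:
            # Close the segment once the trailing silence is long enough.
            if (i - last_speech) * frame_ms >= min_silence_ms:
                segments.append((start * frame_ms, (last_speech + 1) * frame_ms))
                start = None
                last_speech = None

    # Flush a segment that is still open at the end of the audio.
    if start is not None and last_speech is not None:
        segments.append((start * frame_ms, (last_speech + 1) * frame_ms))
    return segments
```

For example, 500 ms of speech, 300 ms of silence and 200 ms of speech yield two segments, while a 50 ms pause is too short to split and the speech stays in one segment. Raising `min_silence_ms` therefore produces fewer, longer sentences; lowering `max_segment_ms` produces more, shorter ones.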

Equal segmentation:

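As the name suggests, this mode cuts the audio file into segments of the same fixed length and then passes them to the model. OpenAI models always use equal segmentation: when an OpenAI model is selected, equal segmentation is applied regardless of whether you chose "whole-file recognition" or "pre-segmentation".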

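In equal segmentation, each segment is 10 s long and the silence interval for splitting sentences is 500 ms. Both values can be configured under Menu -- Tools/Options -- Advanced Options -- VAD.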
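The fixed-length part of equal segmentation can be sketched as follows. This is only an illustration under simplifying assumptions: it cuts a known total duration into consecutive 10 s windows and ignores the 500 ms silence setting entirely. The function name `equal_segments` is hypothetical and is not the program's own code.

```python
from typing import List, Tuple


def equal_segments(total_ms: int, segment_ms: int = 10_000) -> List[Tuple[int, int]]:
    """Cut [0, total_ms) into consecutive segment_ms chunks; the last may be shorter."""
    if segment_ms <= 0:
        raise ValueError("segment_ms must be positive")
    return [
        (start, min(start + segment_ms, total_ms))
        for start in range(0, total_ms, segment_ms)
    ]
```

For a 25 s recording this gives three chunks: 0-10 s, 10-20 s and a shorter final chunk of 20-25 s. Each chunk is then recognized independently, which keeps memory use bounded but means a sentence can be cut at a chunk boundary.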

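Note: with a 10 s segment length, each subtitle is roughly 10 s long, but the dubbed audio for each subtitle is not necessarily 10 s. Its length depends on how long the speech actually takes, and trailing silence is removed from the end of the dubbed audio.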
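The effect of removing trailing silence on duration can be illustrated with a small sketch. It assumes the dubbed clip is available as a list of float samples in the range -1.0 to 1.0 and treats any sample quieter than a fixed amplitude threshold as silence; the names `trim_trailing_silence` and `duration_ms` and the 0.01 threshold are hypothetical, not the program's actual implementation.

```python
from typing import List


def trim_trailing_silence(samples: List[float], threshold: float = 0.01) -> List[float]:
    """Drop samples at the end whose absolute amplitude is below threshold."""
    end = len(samples)
    while end > 0 and abs(samples[end - 1]) < threshold:
        end -= 1
    return samples[:end]


def duration_ms(samples: List[float], sample_rate: int) -> float:
    """Length of the clip in milliseconds at the given sample rate."""
    return len(samples) * 1000 / sample_rate
```

For instance, a 16 kHz clip with 500 ms of speech followed by 125 ms of silence is 625 ms long before trimming and 500 ms after, so the final dubbed length follows the speech rather than the subtitle's time slot.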