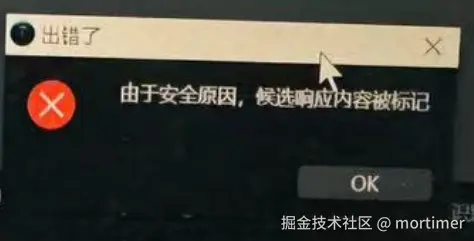

When using Gemini AI for translation or speech recognition tasks, you may sometimes encounter errors such as "response content flagged."

This happens because Gemini applies safety filtering to the content it processes. Part of this filtering can be adjusted in code, and the most lenient setting, "Block None", is already applied. Even so, Gemini makes the final filtering decision based on its own overall evaluation.

The adjustable safety filters in the Gemini API cover the following categories. Content outside these categories cannot be adjusted via code:

| Category | Description |
|---|---|
| Harassment | Negative or harmful comments targeting identity and/or protected attributes. |
| Hate Speech | Rude, disrespectful, or profane content. |
| Sexually Explicit | References to sexual acts or other obscene content. |
| Dangerous Content | Content that promotes, facilitates, or encourages harmful behavior. |
| Civic Integrity | Queries related to elections. |

The table below explains the blocking settings that can be configured in the code for each category.

For example, if you set the blocking threshold for the Hate Speech category to Block Only High, all content with a high probability of containing hate speech will be blocked, while content with a lower probability of containing hate speech will be allowed.

| Threshold (Google AI Studio) | Threshold (API) | Description |
|---|---|---|
| Block None | BLOCK_NONE | Always show content, regardless of the probability of unsafe content. |
| Block Only High | BLOCK_ONLY_HIGH | Block content when the probability of unsafe content is high. |
| Block Medium and Above | BLOCK_MEDIUM_AND_ABOVE | Block content when the probability of unsafe content is medium or high. |
| Block Low and Above | BLOCK_LOW_AND_ABOVE | Block content when the probability of unsafe content is low, medium, or high. |
| Not Applicable | HARM_BLOCK_THRESHOLD_UNSPECIFIED | Threshold not specified; the default threshold is used for blocking. |
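To make the threshold semantics concrete, here is a small pure-Python model of the table above, including the Hate Speech example. It is only an illustration of how each threshold maps to probability levels, not Gemini's actual server-side logic. The level names follow the API's probability ratings (NEGLIGIBLE, LOW, MEDIUM, HIGH); the helper `is_blocked` is hypothetical, and HARM_BLOCK_THRESHOLD_UNSPECIFIED is left out because its default is chosen by Google.

```python
# Illustrative model of the threshold table. NOT Gemini's real filter:
# the service also weighs context and can block even under BLOCK_NONE.

# Probability levels in increasing order.
LEVELS = ["NEGLIGIBLE", "LOW", "MEDIUM", "HIGH"]

# Lowest probability level that triggers a block for each threshold.
# None means "never block".
BLOCK_FROM = {
    "BLOCK_NONE": None,
    "BLOCK_ONLY_HIGH": "HIGH",
    "BLOCK_MEDIUM_AND_ABOVE": "MEDIUM",
    "BLOCK_LOW_AND_ABOVE": "LOW",
}


def is_blocked(threshold: str, probability: str) -> bool:
    """Return True if content rated `probability` is blocked under `threshold`."""
    start = BLOCK_FROM[threshold]
    if start is None:
        return False
    return LEVELS.index(probability) >= LEVELS.index(start)


# The Hate Speech example, with BLOCK_ONLY_HIGH:
print(is_blocked("BLOCK_ONLY_HIGH", "HIGH"))  # True
print(is_blocked("BLOCK_ONLY_HIGH", "LOW"))   # False
```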

You can apply BLOCK_NONE to each adjustable category in code with the following settings (this example uses the `google-generativeai` Python SDK):

```python
import google.generativeai as genai
from google.generativeai.types import HarmBlockThreshold, HarmCategory

genai.configure(api_key="YOUR_API_KEY")  # replace with your own API key

# Set every adjustable category to the most lenient threshold.
safetySettings = [
    {
        "category": HarmCategory.HARM_CATEGORY_HARASSMENT,
        "threshold": HarmBlockThreshold.BLOCK_NONE,
    },
    {
        "category": HarmCategory.HARM_CATEGORY_HATE_SPEECH,
        "threshold": HarmBlockThreshold.BLOCK_NONE,
    },
    {
        "category": HarmCategory.HARM_CATEGORY_SEXUALLY_EXPLICIT,
        "threshold": HarmBlockThreshold.BLOCK_NONE,
    },
    {
        "category": HarmCategory.HARM_CATEGORY_DANGEROUS_CONTENT,
        "threshold": HarmBlockThreshold.BLOCK_NONE,
    },
]

message = "Text to translate"  # the prompt sent to Gemini

model = genai.GenerativeModel('gemini-2.0-flash-exp')
response = model.generate_content(
    message,
    safety_settings=safetySettings,
)
```

However, note that even if every category is set to BLOCK_NONE, Gemini may still filter content based on its contextual safety evaluation.
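When a request fails, it helps to confirm that the safety filter was the cause rather than some other error. The sketch below assumes the parsed JSON body returned by the Gemini REST `generateContent` endpoint, where a blocked prompt carries `promptFeedback.blockReason` and a blocked answer has a candidate with `finishReason` set to `"SAFETY"` (each `safetyRatings` entry may carry a `blocked` flag). The helper `safety_block_reason` and the sample response are hypothetical; the Python SDK exposes the same information through `response.prompt_feedback` and `response.candidates`.

```python
from typing import Optional


def safety_block_reason(response: dict) -> Optional[str]:
    """Return a short description if the response was safety-blocked, else None.

    `response` is assumed to be the parsed JSON body of a generateContent call.
    """
    # Case 1: the prompt itself was blocked, so no candidates are returned.
    block_reason = response.get("promptFeedback", {}).get("blockReason")
    if block_reason:
        return f"prompt blocked: {block_reason}"

    # Case 2: generation was stopped by the safety filter.
    for candidate in response.get("candidates", []):
        if candidate.get("finishReason") == "SAFETY":
            flagged = [
                rating["category"]
                for rating in candidate.get("safetyRatings", [])
                if rating.get("blocked")
            ]
            return "response blocked: " + (", ".join(flagged) or "SAFETY")
    return None


# Hypothetical response in which the answer was stopped for hate speech.
example = {
    "candidates": [{
        "finishReason": "SAFETY",
        "safetyRatings": [
            {"category": "HARM_CATEGORY_HATE_SPEECH", "probability": "HIGH", "blocked": True},
            {"category": "HARM_CATEGORY_HARASSMENT", "probability": "LOW"},
        ],
    }]
}
print(safety_block_reason(example))  # response blocked: HARM_CATEGORY_HATE_SPEECH
```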

How to Reduce the Likelihood of Safety Restrictions?

In general, the flash series models tend to filter more strictly, while the pro and thinking series models are relatively less restrictive, so try switching to a different model.
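Switching models can be automated by retrying a blocked request on the next model in a list. In this minimal sketch, `send(model_name, text)` is a hypothetical callable you would implement around the Gemini API so that it returns the output text, or `None` when the response is safety-blocked. The model names `model-a` and `model-b` are placeholders; use whichever models your account can access.

```python
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical signature: send(model_name, text) -> output text, or None if blocked.
Sender = Callable[[str, str], Optional[str]]


def translate_with_fallback(text: str, models: Sequence[str], send: Sender) -> Tuple[str, str]:
    """Try each model in order; return (model_name, output) from the first unblocked one."""
    for model_name in models:
        output = send(model_name, text)
        if output is not None:
            return model_name, output
    raise RuntimeError("all models blocked the request")


def fake_send(model_name: str, text: str) -> Optional[str]:
    # Stand-in for a real API call: pretend "model-a" blocks everything.
    return None if model_name == "model-a" else f"[{model_name}] {text}"


print(translate_with_fallback("hello", ["model-a", "model-b"], fake_send))
# ('model-b', '[model-b] hello')
```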

Additionally, when dealing with potentially sensitive content, sending smaller amounts of content at a time and reducing the context length can help lower the frequency of safety filtering.
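One way to send less content at a time is to split subtitle lines into small batches and send each batch as a separate request, without earlier batches in the context. The helper `batch_lines` and its default limits below are hypothetical and only illustrate the idea; tune the limits to your own material.

```python
from typing import Iterator, List, Sequence


def batch_lines(lines: Sequence[str], max_lines: int = 10, max_chars: int = 1000) -> Iterator[List[str]]:
    """Yield consecutive batches of at most `max_lines` lines and `max_chars` characters.

    A single line longer than `max_chars` is still emitted as its own batch.
    """
    batch: List[str] = []
    size = 0
    for line in lines:
        # Close the current batch if adding this line would exceed either limit.
        if batch and (len(batch) >= max_lines or size + len(line) > max_chars):
            yield batch
            batch, size = [], 0
        batch.append(line)
        size += len(line)
    if batch:
        yield batch


subtitles = [f"line {i}" for i in range(1, 8)]
for chunk in batch_lines(subtitles, max_lines=3):
    print(chunk)  # each chunk would be sent as its own request
```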

How to Completely Disable Gemini's Safety Judgments and Allow All Content?

Link a credit card that supports foreign-currency payments and switch to a paid, monthly-billed account.