Most voiceover enthusiasts know that Microsoft's edge-tts is a powerful, free text-to-speech tool. Its main drawback is the increasingly strict traffic restrictions when it is used from China. Deploying it on Cloudflare gets around this, and it also lets you use Cloudflare's free compute and bandwidth.

First, here is the end result: once you finish, you will have a voiceover API and a web page for generating voiceovers.

This is the web interface.

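```javascript
// Replace these two placeholders with your own values
const API_URL = 'https://your-custom-domain/v1/audio/speech'; // URL after deploying to Cloudflare, plus /v1/audio/speech
const API_KEY = 'your-api-key'; // the API_KEY set in the worker (anything works if it is left empty there)

async function synthesize(text) {
  const requestBody = {
    model: 'tts-1',
    input: text, // the text to synthesize into speech
    voice: 'zh-CN-XiaoxiaoNeural',
    response_format: 'mp3',
    speed: 1.0,
  };

  const response = await fetch(API_URL, {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Authorization': `Bearer ${API_KEY}`,
    },
    body: JSON.stringify(requestBody),
  });
  if (!response.ok) {
    throw new Error(`TTS request failed: ${response.status} ${await response.text()}`);
  }
  return response.blob(); // MP3 audio
}
```

This is the JS function for calling the API; its request format is compatible with the OpenAI TTS API.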
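The worker deployed in the next section converts these OpenAI-style fields into SSML prosody values: `speed` 1.0 means normal rate, and the worker also accepts the non-OpenAI fields `volume` (a fraction, 0 = unchanged) and `pitch` (an offset in Hz). It also synthesizes each input line separately, and a line ending in `[N]` gets an N-millisecond pause. The sketch below mirrors that conversion as standalone functions so you can see what a request produces; `toProsody` and `splitLines` are hypothetical names, and rounding to whole percent and skipping blank lines are choices of this sketch.

```javascript
// Hypothetical helpers mirroring how the worker maps request fields to SSML.

// SSML relative values need an explicit sign, e.g. "+10%" or "-5Hz"
function signed(n, unit) {
  return n >= 0 ? `+${n}${unit}` : `${n}${unit}`;
}

function toProsody({ speed = 1.0, volume = 0, pitch = 0 } = {}) {
  // speed 1.0 = normal; 1.5 -> +50%, 0.9 -> -10% (rounded to whole percent)
  const rate = Math.round((parseFloat(speed) - 1.0) * 100);
  // volume is a fraction: 0.2 -> +20%, -0.5 -> -50%
  const vol = Math.round(parseFloat(volume) * 100);
  // pitch is an offset in Hz
  const hz = parseInt(pitch, 10);
  return {
    rate: signed(rate, "%"),
    volume: signed(vol, "%"),
    pitch: signed(hz, "Hz"),
  };
}

// Each line is synthesized separately; a trailing "[N]" requests an
// N-millisecond pause after that line.
function splitLines(input) {
  return input
    .trim()
    .split("\n")
    .filter((line) => line.trim() !== "") // this sketch skips blank lines
    .map((line) => {
      const m = line.match(/\[(\d+)\]\s*$/);
      return m
        ? { text: line.slice(0, m.index), pauseMs: parseInt(m[1], 10) }
        : { text: line, pauseMs: 0 };
    });
}

console.log(toProsody({ speed: 1.2, volume: 0, pitch: -5 }));
console.log(splitLines("First sentence.[500]\nSecond sentence."));
```

So a request with `"speed": 1.2` is spoken with `rate="+20%"`, and `First sentence.[500]` is followed by a half-second break.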

Now, let's discuss how to deploy it on Cloudflare.

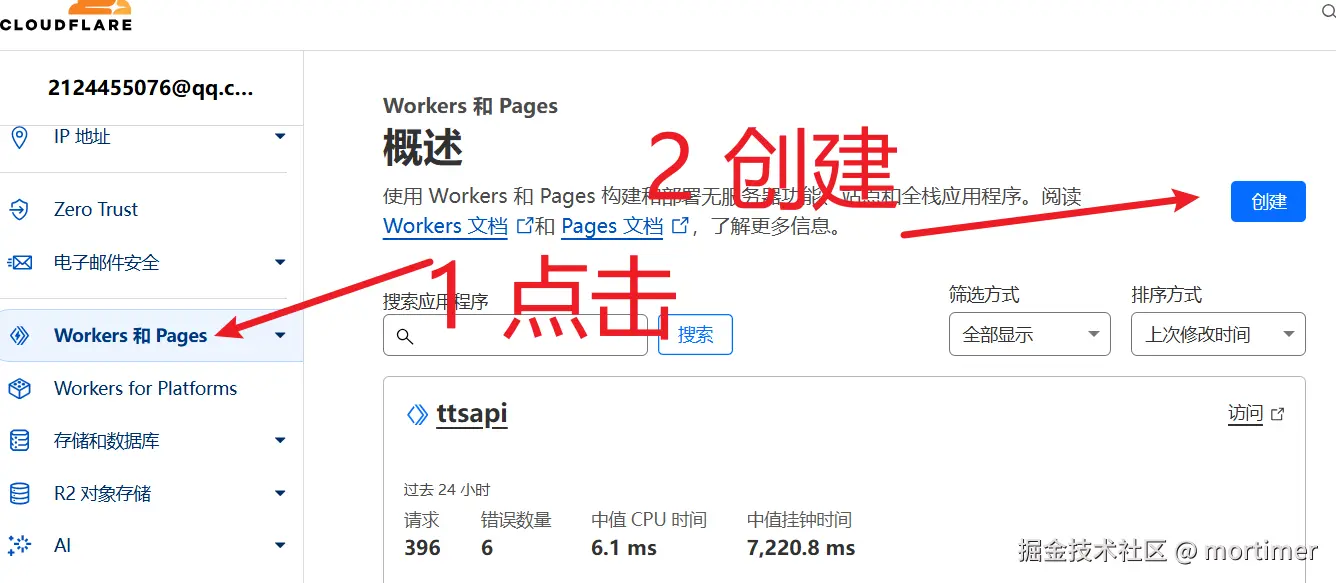

Log in to Cloudflare and Create a Worker

URL: https://dash.cloudflare.com/ (registration and login are not covered here)

After logging in, click Workers and Pages on the left to open the creation page.

Click Create.

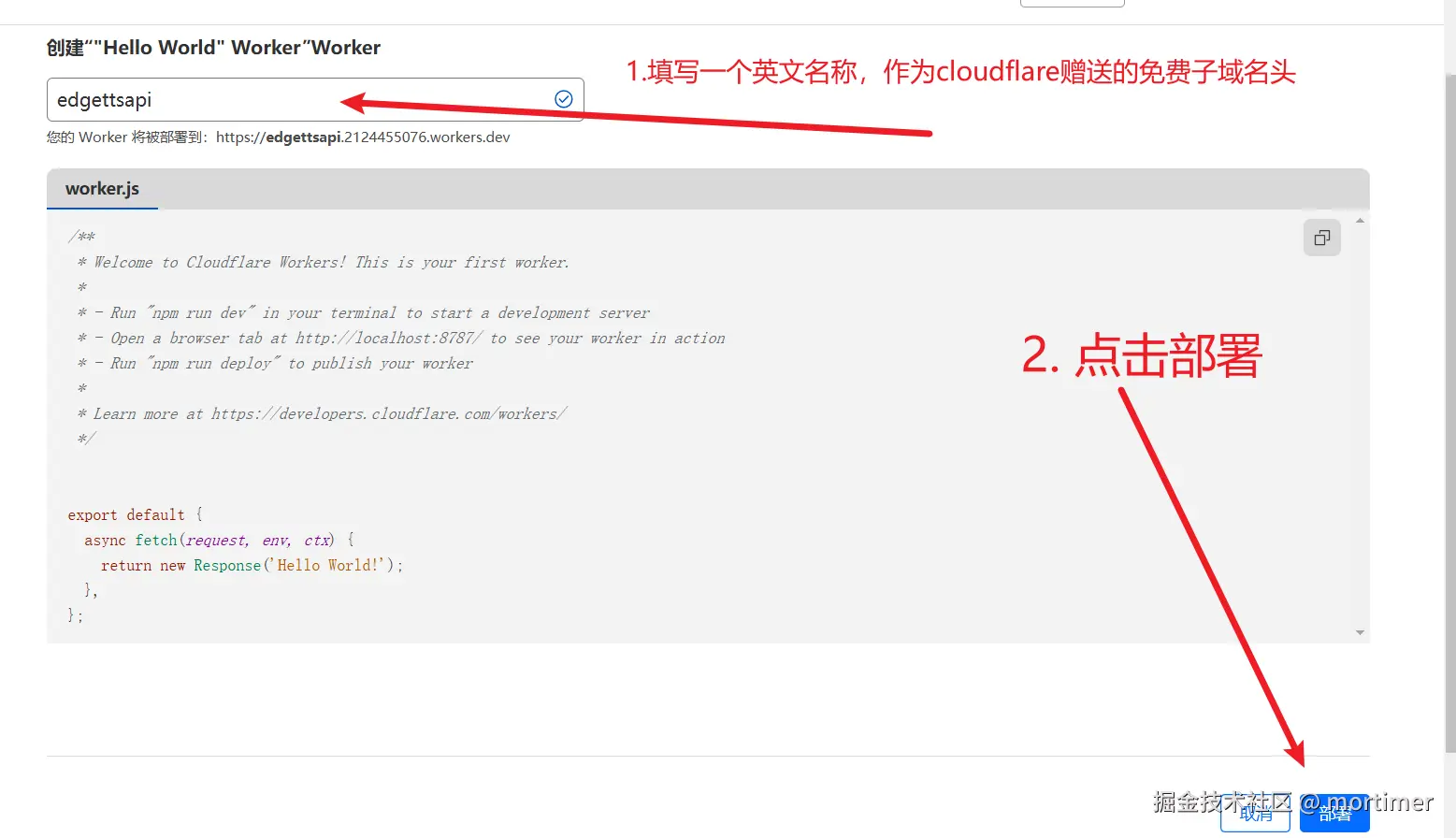

Then enter a name in English letters in the input box; it becomes the prefix of the free subdomain Cloudflare provides.

Click Deploy in the bottom right, then click Edit Code on the next page. This is the core step: copying in the code.

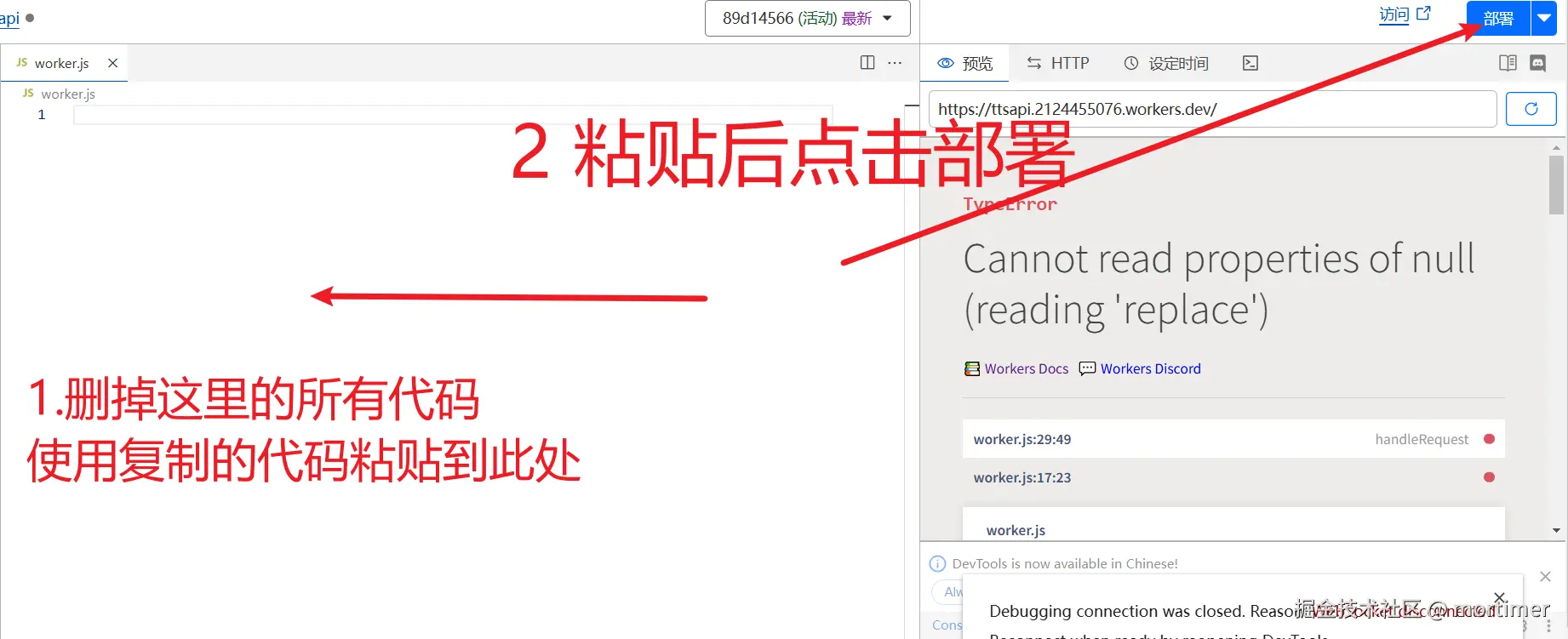

Delete all the existing code and replace it with the code below.

```javascript
// Custom API key to prevent abuse; leave empty to disable the check
const API_KEY = '';

// Refresh the Microsoft token this many seconds before it expires
const TOKEN_REFRESH_BEFORE_EXPIRY = 3 * 60;

// Cached endpoint and token from the Microsoft Translator endpoint service
let tokenInfo = {
  endpoint: null,
  token: null,
  expiredAt: null
};

addEventListener("fetch", event => {
  event.respondWith(handleRequest(event.request));
});

// OpenAI-style JSON error response with CORS headers
function jsonError(status, message, code, param = null, type = "api_error") {
  return new Response(JSON.stringify({ error: { message, type, param, code } }), {
    status,
    headers: {
      "Content-Type": "application/json",
      ...makeCORSHeaders()
    }
  });
}

async function handleRequest(request) {
  if (request.method === "OPTIONS") {
    return handleOptions(request);
  }

  // Accept "Authorization: Bearer <key>" or a raw "x-api-key: <key>" header
  const authHeader = request.headers.get("authorization");
  const apiKey = authHeader?.startsWith("Bearer ")
    ? authHeader.slice(7)
    : request.headers.get("x-api-key");

  // Only validate if API_KEY is set
  if (API_KEY && apiKey !== API_KEY) {
    return jsonError(401, "Invalid API key. Use 'Authorization: Bearer your-api-key' header",
      "invalid_api_key", null, "invalid_request_error");
  }

  const path = new URL(request.url).pathname;

  if (path === "/v1/audio/speech" && request.method === "POST") {
    try {
      const requestBody = await request.json();
      const {
        model = "tts-1",          // accepted for OpenAI compatibility, not used
        input,
        voice = "zh-CN-XiaoxiaoNeural",
        response_format = "mp3",  // accepted for compatibility; output is always MP3
        speed = '1.0',            // 1.0 = normal speed
        volume = '0',             // relative volume change, 0 = unchanged
        pitch = '0',              // pitch offset in Hz, default 0
        style = "general"         // speaking style, default general
      } = requestBody;

      if (typeof input !== "string" || input.trim() === "") {
        return jsonError(400, "'input' must be a non-empty string", "invalid_input", "input", "invalid_request_error");
      }

      // Convert to SSML prosody strings such as "+10%" or "-5Hz"
      const signed = (n, unit) => (n >= 0 ? `+${n}${unit}` : `${n}${unit}`);
      const rate = Math.round((parseFloat(speed) - 1.0) * 100);
      const numVolume = Math.round(parseFloat(volume) * 100);
      const numPitch = parseInt(pitch, 10);

      return await getVoice(
        input,
        voice,
        signed(rate, "%"),
        signed(numPitch, "Hz"),
        signed(numVolume, "%"),
        style,
        "audio-24khz-48kbitrate-mono-mp3"
      );
    } catch (error) {
      console.error("Error:", error);
      return jsonError(500, error.message, "edge_tts_error");
    }
  }

  // Default 404 response
  return new Response("Not Found", { status: 404, headers: makeCORSHeaders() });
}

async function handleOptions(request) {
  return new Response(null, {
    status: 204,
    headers: {
      ...makeCORSHeaders(),
      "Access-Control-Allow-Methods": "GET,HEAD,POST,OPTIONS",
      "Access-Control-Allow-Headers": request.headers.get("Access-Control-Request-Headers") || "Authorization"
    }
  });
}

async function getVoice(text, voiceName = "zh-CN-XiaoxiaoNeural", rate = '+0%', pitch = '+0Hz', volume = '+0%', style = "general", outputFormat = "audio-24khz-48kbitrate-mono-mp3") {
  try {
    // Synthesize each non-empty line separately
    const lines = text.trim().split("\n").filter(line => line.trim() !== "");
    const audioChunks = [];
    for (const line of lines) {
      try {
        audioChunks.push(await getAudioChunk(line, voiceName, rate, pitch, volume, style, outputFormat));
      } catch (e) {
        return jsonError(500, String(e), "edge_tts_error",
          `${voiceName}, ${rate}, ${pitch}, ${volume}, ${style}, ${outputFormat}`);
      }
    }

    // Concatenate the MP3 chunks into one response
    const concatenatedAudio = new Blob(audioChunks, { type: 'audio/mpeg' });
    return new Response(concatenatedAudio, {
      headers: {
        "Content-Type": "audio/mpeg",
        ...makeCORSHeaders()
      }
    });
  } catch (error) {
    console.error("Speech synthesis failed:", error);
    return jsonError(500, String(error), "edge_tts_error " + voiceName);
  }
}

// Get audio data for a single line
async function getAudioChunk(text, voiceName, rate, pitch, volume, style, outputFormat = 'audio-24khz-48kbitrate-mono-mp3') {
  const endpoint = await getEndpoint();
  const url = `https://${endpoint.r}.tts.speech.microsoft.com/cognitiveservices/v1`;

  // A trailing "[N]", e.g. "Hello[500]", adds an N-millisecond pause after the line
  let pauseMs = 0;
  const m = text.match(/\[(\d+)\]\s*$/);
  if (m) {
    pauseMs = parseInt(m[1], 10);
    text = text.slice(0, m.index);
  }

  const response = await fetchWithTimeout(url, {
    method: "POST",
    headers: {
      "Authorization": endpoint.t,
      "Content-Type": "application/ssml+xml",
      "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/127.0.0.0 Safari/537.36 Edg/127.0.0.0",
      "X-Microsoft-OutputFormat": outputFormat
    },
    body: getSsml(text, voiceName, rate, pitch, volume, style, pauseMs)
  });

  if (!response.ok) {
    const errorText = await response.text();
    throw new Error(`Edge TTS API error: ${response.status} ${errorText}`);
  }
  return response.blob();
}

// Escape characters that would break the SSML document (e.g. "&" or "<" in the text)
function escapeXml(str) {
  return String(str)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function getSsml(text, voiceName, rate, pitch, volume, style, pauseMs = 0) {
  const pause = pauseMs > 0 ? `<break time="${pauseMs}ms" />` : '';
  return `<speak xmlns="http://www.w3.org/2001/10/synthesis" xmlns:mstts="http://www.w3.org/2001/mstts" version="1.0" xml:lang="zh-CN">
  <voice name="${escapeXml(voiceName)}">
    <mstts:express-as style="${escapeXml(style)}" styledegree="2.0" role="default">
      <prosody rate="${rate}" pitch="${pitch}" volume="${volume}">${escapeXml(text)}</prosody>
    </mstts:express-as>
    ${pause}
  </voice>
</speak>`;
}

async function getEndpoint() {
  const now = Date.now() / 1000;
  if (tokenInfo.token && tokenInfo.expiredAt && now < tokenInfo.expiredAt - TOKEN_REFRESH_BEFORE_EXPIRY) {
    return tokenInfo.endpoint;
  }

  // Get new token
  const endpointUrl = "https://dev.microsofttranslator.com/apps/endpoint?api-version=1.0";
  const clientId = uuid();

  try {
    const response = await fetchWithTimeout(endpointUrl, {
      method: "POST",
      headers: {
        "Accept-Language": "zh-Hans",
        "X-ClientVersion": "4.0.530a 5fe1dc6c",
        "X-UserId": "0f04d16a175c411e",
        "X-HomeGeographicRegion": "zh-Hans-CN",
        "X-ClientTraceId": clientId,
        "X-MT-Signature": await sign(endpointUrl),
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/127.0.0.0 Safari/537.36 Edg/127.0.0.0",
        "Content-Type": "application/json; charset=utf-8",
        "Content-Length": "0",
        "Accept-Encoding": "gzip"
      }
    });
    if (!response.ok) {
      throw new Error(`Failed to get endpoint: ${response.status}`);
    }

    const data = await response.json();
    // The token is a JWT; decode its base64url payload to read the expiry time "exp"
    const payload = data.t.split(".")[1].replace(/-/g, "+").replace(/_/g, "/");
    const decodedJwt = JSON.parse(atob(payload.padEnd(Math.ceil(payload.length / 4) * 4, "=")));

    tokenInfo = {
      endpoint: data,
      token: data.t,
      expiredAt: decodedJwt.exp
    };
    return data;
  } catch (error) {
    console.error("Failed to get endpoint:", error);
    // If a cached token exists, try using it even if expired
    if (tokenInfo.token) {
      console.log("Using expired cached token");
      return tokenInfo.endpoint;
    }
    throw error;
  }
}

function makeCORSHeaders() {
  return {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Methods": "GET,HEAD,POST,OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type, Authorization, x-api-key",
    "Access-Control-Max-Age": "86400"
  };
}

async function hmacSha256(key, data) {
  const cryptoKey = await crypto.subtle.importKey(
    "raw",
    key,
    { name: "HMAC", hash: { name: "SHA-256" } },
    false,
    ["sign"]
  );
  const signature = await crypto.subtle.sign("HMAC", cryptoKey, new TextEncoder().encode(data));
  return new Uint8Array(signature);
}

function base64ToBytes(base64) {
  const binaryString = atob(base64);
  const bytes = new Uint8Array(binaryString.length);
  for (let i = 0; i < binaryString.length; i++) {
    bytes[i] = binaryString.charCodeAt(i);
  }
  return bytes;
}

function bytesToBase64(bytes) {
  return btoa(String.fromCharCode(...bytes));
}

function uuid() {
  return crypto.randomUUID().replace(/-/g, "");
}

// Build the X-MT-Signature header value: HMAC-SHA256 over the URL, date and a random ID
async function sign(urlStr) {
  const url = urlStr.split("://")[1];
  const encodedUrl = encodeURIComponent(url);
  const uuidStr = uuid();
  const formattedDate = dateFormat();
  const bytesToSign = `MSTranslatorAndroidApp${encodedUrl}${formattedDate}${uuidStr}`.toLowerCase();
  const decode = base64ToBytes("oik6PdDdMnOXemTbwvMn9de/h9lFnfBaCWbGMMZqqoSaQaqUOqjVGm5NqsmjcBI1x+sS9ugjB55HEJWRiFXYFw==");
  const signData = await hmacSha256(decode, bytesToSign);
  const signBase64 = bytesToBase64(signData);
  return `MSTranslatorAndroidApp::${signBase64}::${formattedDate}::${uuidStr}`;
}

function dateFormat() {
  const formattedDate = (new Date()).toUTCString().replace(/GMT/, "").trim() + " GMT";
  return formattedDate.toLowerCase();
}

// Fetch with a timeout (default 30 s) so a stalled upstream request does not hang the worker
async function fetchWithTimeout(url, options, timeout = 30000) {
  const controller = new AbortController();
  const id = setTimeout(() => controller.abort(), timeout);
  try {
    return await fetch(url, { ...options, signal: controller.signal });
  } finally {
    clearTimeout(id);
  }
}
```

Note especially the top two lines of code, which set the API key to prevent abuse.

// This is the API key for authentication

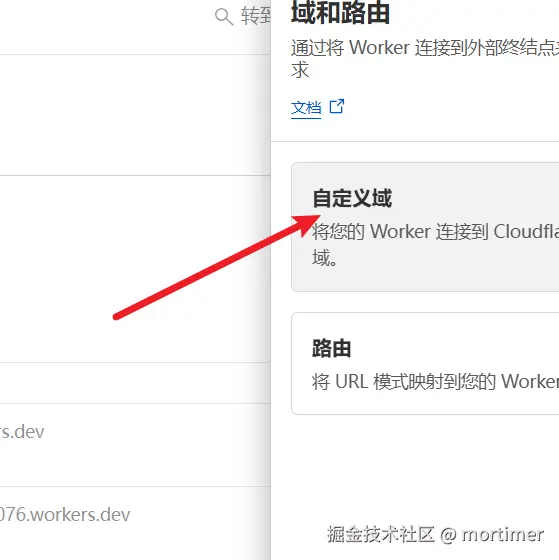

const API_KEY = '';Bind Your Own Domain

By default, the worker is bound to https://subdomain-prefix.your-username.workers.dev/

Unfortunately, this domain is blocked in China, so to use the API there without a VPN you need to bind your own domain.

- If you haven't added your domain to Cloudflare yet, click Add --> Existing Domain in the top right and enter your domain.
- If you've already added a domain to Cloudflare, click the worker's name on the left to return to its management page, then add a custom domain.

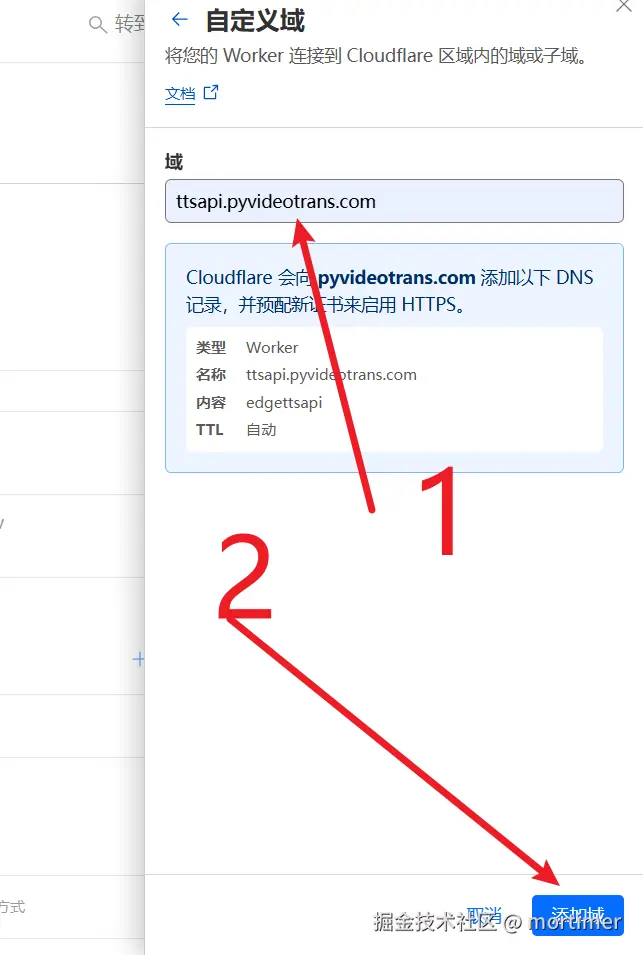

Click Settings --> Domains and Routes --> Add.

Then click Custom Domain and enter a subdomain of a domain you have already added to Cloudflare. For example, since my domain pyvideotrans.com is on Cloudflare, I can enter ttsapi.pyvideotrans.com.

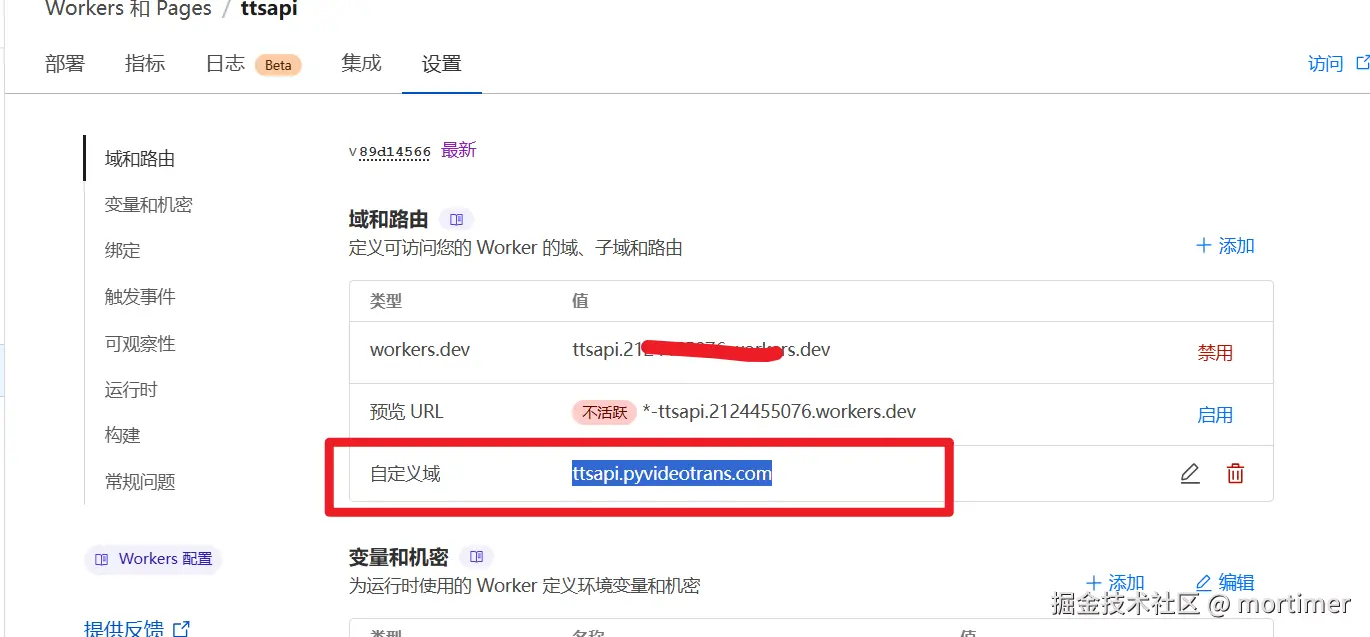

After adding it, your custom domain is listed on this page.

Use in Video Translation Software

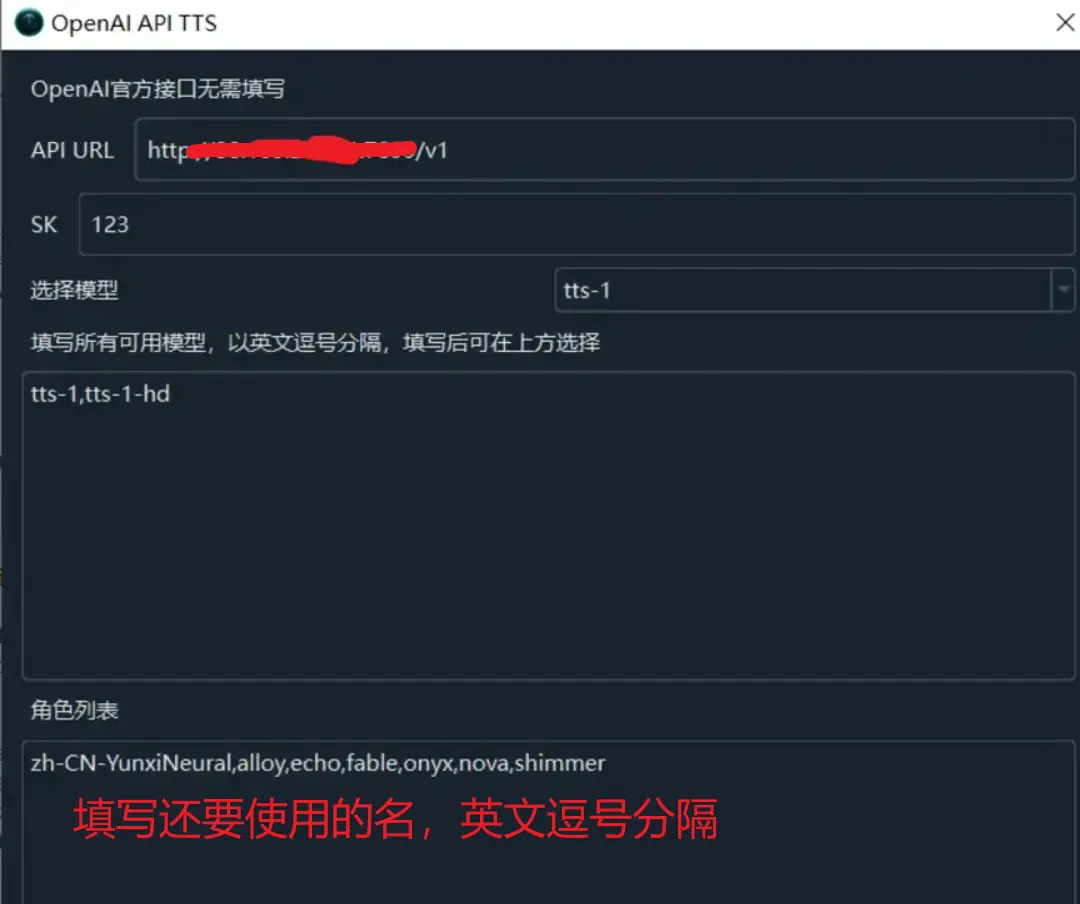

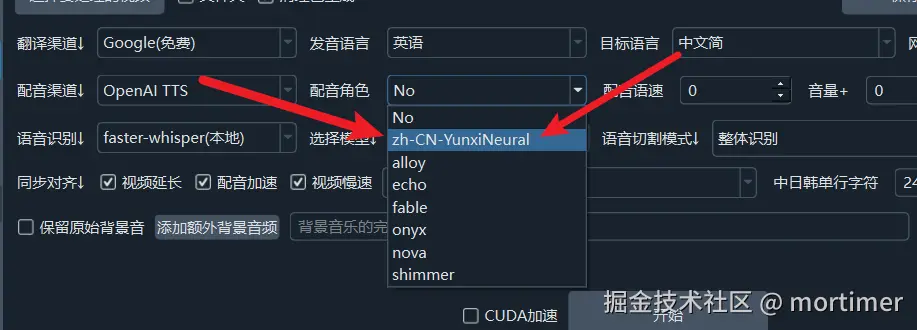

Upgrade the software to v3.40 to use this feature. Download the upgrade at https://pyvideotrans.com/downpackage.

Open the menu and go to "TTS Settings" -> "OpenAI TTS". Change the interface address to https://replace-with-your-custom-domain/v1 and enter your API_KEY in "SK". In the role list, enter the voices you want to use, separated by commas.
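The address ends at /v1 because OpenAI-compatible clients append the endpoint path themselves (the OpenAI SDK sends speech requests to `<base>/audio/speech`), which yields /v1/audio/speech, the only path the worker serves. The sketch below illustrates that joining and the comma-separated role list; `speechEndpoint` and `parseRoleList` are hypothetical helpers, and pyVideoTrans's own parsing may differ.

```javascript
// Hypothetical helpers illustrating the settings above.

// Join the base address (ending in /v1) with the speech path the worker serves.
function speechEndpoint(baseUrl) {
  const base = baseUrl.replace(/\/+$/, ""); // drop trailing slashes
  if (!base.endsWith("/v1")) {
    throw new Error("Base address should end with /v1, e.g. https://your-domain/v1");
  }
  return `${base}/audio/speech`;
}

// Turn "zh-CN-XiaoxiaoNeural, en-US-AvaNeural" into a clean array of voice names.
function parseRoleList(text) {
  return text
    .split(/[,，]/) // this sketch accepts both ASCII and full-width commas
    .map((s) => s.trim())
    .filter((s) => s.length > 0);
}

console.log(speechEndpoint("https://ttsapi.pyvideotrans.com/v1/"));
console.log(parseRoleList("zh-CN-XiaoxiaoNeural, en-US-AvaNeural,"));
```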

Available Voices

The following is a list of available voices. Note that the text language and voice must match.
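Since every voice name begins with its locale (language code, then region), this match can be checked before sending a request. A minimal sketch; `voiceLocale` and `voiceMatchesLanguage` are hypothetical helper names, and "language" here means a code such as `zh` or `en`.

```javascript
// Hypothetical helpers: voice names look like "<lang>-<REGION>-<Name>Neural",
// e.g. "zh-CN-XiaoxiaoNeural" or "zh-CN-liaoning-XiaobeiNeural".
function voiceLocale(voice) {
  const m = voice.match(/^([a-z]{2,3})-([A-Z]{2})-/);
  return m ? { language: m[1], region: m[2] } : null;
}

// True when the voice's language code equals the given one (e.g. "zh", "en").
function voiceMatchesLanguage(voice, language) {
  const locale = voiceLocale(voice);
  return locale !== null && locale.language === language.toLowerCase();
}

console.log(voiceMatchesLanguage("zh-CN-XiaoxiaoNeural", "zh"));
console.log(voiceMatchesLanguage("en-US-AvaNeural", "zh"));
```

Voices with Multilingual in the name (for example en-US-AvaMultilingualNeural) are designed to speak several languages, so a strict prefix check like this may reject combinations that would actually work.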

Chinese voices:

zh-HK-HiuGaaiNeural

zh-HK-HiuMaanNeural

zh-HK-WanLungNeural

zh-CN-XiaoxiaoNeural

zh-CN-XiaoyiNeural

zh-CN-YunjianNeural

zh-CN-YunxiNeural

zh-CN-YunxiaNeural

zh-CN-YunyangNeural

zh-CN-liaoning-XiaobeiNeural

zh-TW-HsiaoChenNeural

zh-TW-YunJheNeural

zh-TW-HsiaoYuNeural

zh-CN-shaanxi-XiaoniNeural

English voices:

en-AU-NatashaNeural

en-AU-WilliamNeural

en-CA-ClaraNeural

en-CA-LiamNeural

en-HK-SamNeural

en-HK-YanNeural

en-IN-NeerjaExpressiveNeural

en-IN-NeerjaNeural

en-IN-PrabhatNeural

en-IE-ConnorNeural

en-IE-EmilyNeural

en-KE-AsiliaNeural

en-KE-ChilembaNeural

en-NZ-MitchellNeural

en-NZ-MollyNeural

en-NG-AbeoNeural

en-NG-EzinneNeural

en-PH-JamesNeural

en-PH-RosaNeural

en-SG-LunaNeural

en-SG-WayneNeural

en-ZA-LeahNeural

en-ZA-LukeNeural

en-TZ-ElimuNeural

en-TZ-ImaniNeural

en-GB-LibbyNeural

en-GB-MaisieNeural

en-GB-RyanNeural

en-GB-SoniaNeural

en-GB-ThomasNeural

en-US-AvaMultilingualNeural

en-US-AndrewMultilingualNeural

en-US-EmmaMultilingualNeural

en-US-BrianMultilingualNeural

en-US-AvaNeural

en-US-AndrewNeural

en-US-EmmaNeural

en-US-BrianNeural

en-US-AnaNeural

en-US-AriaNeural

en-US-ChristopherNeural

en-US-EricNeural

en-US-GuyNeural

en-US-JennyNeural

en-US-MichelleNeural

en-US-RogerNeural

en-US-SteffanNeural

Japanese voices:

ja-JP-KeitaNeural

ja-JP-NanamiNeural

Korean voices:

ko-KR-HyunsuNeural

ko-KR-InJoonNeural

ko-KR-SunHiNeural

French voices:

fr-BE-CharlineNeural

fr-BE-GerardNeural

fr-CA-ThierryNeural

fr-CA-AntoineNeural

fr-CA-JeanNeural

fr-CA-SylvieNeural

fr-FR-VivienneMultilingualNeural

fr-FR-RemyMultilingualNeural

fr-FR-DeniseNeural

fr-FR-EloiseNeural

fr-FR-HenriNeural

fr-CH-ArianeNeural

fr-CH-FabriceNeural

German voices:

de-AT-IngridNeural

de-AT-JonasNeural

de-DE-SeraphinaMultilingualNeural

de-DE-FlorianMultilingualNeural

de-DE-AmalaNeural

de-DE-ConradNeural

de-DE-KatjaNeural

de-DE-KillianNeural

de-CH-JanNeural

de-CH-LeniNeural

Spanish voices:

es-AR-ElenaNeural

es-AR-TomasNeural

es-BO-MarceloNeural

es-BO-SofiaNeural

es-CL-CatalinaNeural

es-CL-LorenzoNeural

es-ES-XimenaNeural

es-CO-GonzaloNeural

es-CO-SalomeNeural

es-CR-JuanNeural

es-CR-MariaNeural

es-CU-BelkysNeural

es-CU-ManuelNeural

es-DO-EmilioNeural

es-DO-RamonaNeural

es-EC-AndreaNeural

es-EC-LuisNeural

es-SV-LorenaNeural

es-SV-RodrigoNeural

es-GQ-JavierNeural

es-GQ-TeresaNeural

es-GT-AndresNeural

es-GT-MartaNeural

es-HN-CarlosNeural

es-HN-KarlaNeural

es-MX-DaliaNeural

es-MX-JorgeNeural

es-NI-FedericoNeural

es-NI-YolandaNeural

es-PA-MargaritaNeural

es-PA-RobertoNeural

es-PY-MarioNeural

es-PY-TaniaNeural

es-PE-AlexNeural

es-PE-CamilaNeural

es-PR-KarinaNeural

es-PR-VictorNeural

es-ES-AlvaroNeural

es-ES-ElviraNeural

es-US-AlonsoNeural

es-US-PalomaNeural

es-UY-MateoNeural

es-UY-ValentinaNeural

es-VE-PaolaNeural

es-VE-SebastianNeural

Arabic voices:

ar-DZ-AminaNeural

ar-DZ-IsmaelNeural

ar-BH-AliNeural

ar-BH-LailaNeural

ar-EG-SalmaNeural

ar-EG-ShakirNeural

ar-IQ-BasselNeural

ar-IQ-RanaNeural

ar-JO-SanaNeural

ar-JO-TaimNeural

ar-KW-FahedNeural

ar-KW-NouraNeural

ar-LB-LaylaNeural

ar-LB-RamiNeural

ar-LY-ImanNeural

ar-LY-OmarNeural

ar-MA-JamalNeural

ar-MA-MounaNeural

ar-OM-AbdullahNeural

ar-OM-AyshaNeural

ar-QA-AmalNeural

ar-QA-MoazNeural

ar-SA-HamedNeural

ar-SA-ZariyahNeural

ar-SY-AmanyNeural

ar-SY-LaithNeural

ar-TN-HediNeural

ar-TN-ReemNeural

ar-AE-FatimaNeural

ar-AE-HamdanNeural

ar-YE-MaryamNeural

ar-YE-SalehNeural

Bengali voices:

bn-BD-NabanitaNeural

bn-BD-PradeepNeural

bn-IN-BashkarNeural

bn-IN-TanishaaNeural

Czech voices:

cs-CZ-AntoninNeural

cs-CZ-VlastaNeural

Dutch voices:

nl-BE-ArnaudNeural

nl-BE-DenaNeural

nl-NL-ColetteNeural

nl-NL-FennaNeural

nl-NL-MaartenNeural

Hebrew voices:

he-IL-AvriNeural

he-IL-HilaNeural

Hindi voices:

hi-IN-MadhurNeural

hi-IN-SwaraNeural

Hungarian voices:

hu-HU-NoemiNeural

hu-HU-TamasNeural

Indonesian voices:

id-ID-ArdiNeural

id-ID-GadisNeural

Italian voices:

it-IT-GiuseppeNeural

it-IT-DiegoNeural

it-IT-ElsaNeural

it-IT-IsabellaNeural

Kazakh voices:

kk-KZ-AigulNeural

kk-KZ-DauletNeural

Malay voices:

ms-MY-OsmanNeural

ms-MY-YasminNeural

Polish voices:

pl-PL-MarekNeural

pl-PL-ZofiaNeural

Portuguese voices:

pt-BR-ThalitaNeural

pt-BR-AntonioNeural

pt-BR-FranciscaNeural

pt-PT-DuarteNeural

pt-PT-RaquelNeural

Russian voices:

ru-RU-DmitryNeural

ru-RU-SvetlanaNeural

Swahili voices:

sw-KE-RafikiNeural

sw-KE-ZuriNeural

sw-TZ-DaudiNeural

sw-TZ-RehemaNeural

Thai voices:

th-TH-NiwatNeural

th-TH-PremwadeeNeural

Turkish voices:

tr-TR-AhmetNeural

tr-TR-EmelNeural

Ukrainian voices:

uk-UA-OstapNeural

uk-UA-PolinaNeural

Vietnamese voices:

vi-VN-HoaiMyNeural

vi-VN-NamMinhNeural

Test with OpenAI SDK

This interface is compatible with OpenAI, so you can test it directly with the OpenAI SDK, as in the following Python code.

```python
from openai import OpenAI

api_key = 'adgas213423235saeg'  # Replace with your actual API key
base_url = 'https://xxx.xxx.com/v1'  # Replace with your custom domain, with /v1 appended

client = OpenAI(api_key=api_key, base_url=base_url)

data = {
    'model': 'tts-1',
    'input': 'Hello, dear friends',
    'voice': 'zh-CN-YunjianNeural',
    'response_format': 'mp3',
    'speed': 1.0,
}

try:
    response = client.audio.speech.create(**data)
    # The response body is the MP3 audio
    with open('./test_openai.mp3', 'wb') as f:
        f.write(response.content)
    print("MP3 file saved successfully to test_openai.mp3")
except Exception as e:
    print(f"An error occurred: {e}")
```

Build the Web Interface

Now that we have the API, how do we build the web page?

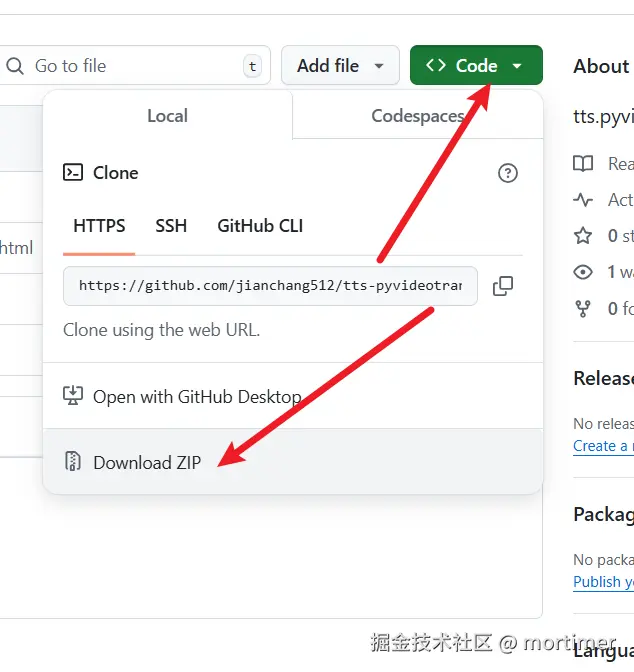

Go to the project at https://github.com/jianchang512/tts-pyvideotrans, download and extract it, then place the three files index.html, output.css, and vue.js in a directory served by a web server and open index.html through it.

Note: in index.html, search for https://ttsapi.pyvideotrans.com and replace it with the custom domain you bound to your Cloudflare worker; otherwise the page won't work.
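To script that replacement instead of editing by hand, here is a minimal sketch that works on the file's text; `replaceApiDomain` is a hypothetical helper, and reading and writing the file (for example with Node's `fs.readFileSync` and `fs.writeFileSync`) is left to you.

```javascript
// Hypothetical helper: swap the original site's API domain for your own.
function replaceApiDomain(html, newDomain, oldDomain = "https://ttsapi.pyvideotrans.com") {
  // Normalize the new domain to "https://host" without a trailing slash
  const withScheme = /^https?:\/\//.test(newDomain) ? newDomain : `https://${newDomain}`;
  const target = withScheme.replace(/\/+$/, "");
  // split/join replaces every occurrence without needing to escape regex characters
  return html.split(oldDomain).join(target);
}

const sample = '<script>const api = "https://ttsapi.pyvideotrans.com/v1/audio/speech";</script>';
console.log(replaceApiDomain(sample, "tts.example.com"));
```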

Important: delete the 4 script lines at the bottom of index.html. They load statistics and ads for the original site, where this project is used as-is.