Just yesterday, I encountered a textbook database problem. I needed to drop an index on a large table with 60 million rows, thinking a simple ALTER TABLE command would suffice. Little did I know it would kick off a thrilling, hours-long troubleshooting and recovery journey.

The entire process involved a series of issues: DDL hanging, fatal flaws in the table engine, table corruption and repair, and abnormal index statistics. I've documented the entire process for future reference and to help anyone who might face similar challenges.

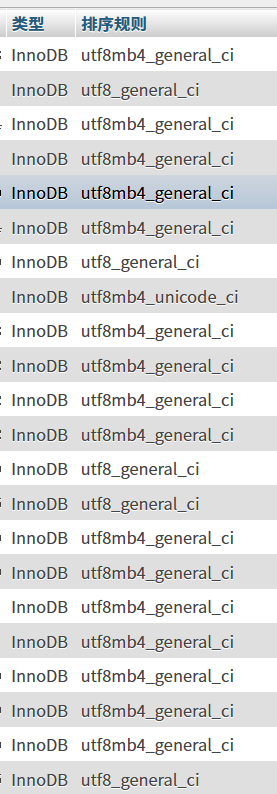

The protagonist of the story: A table named wa_xinghao with over 60 million rows.

Act 1: Undercurrents in Calm Waters—Why Did ALTER TABLE Hang?

It all started with this command:

ALTER TABLE `wa_xinghao` DROP INDEX `product_id-s1`;After execution, the terminal hung without any response. Meanwhile, I noticed that all queries related to this table in the application became extremely slow or even timed out. My first thought: It's locked!

I immediately opened a new database connection and ran SHOW FULL PROCESSLIST; for diagnosis.

Usually, ALTER hangs most commonly due to Waiting for table metadata lock, meaning another long transaction or query is holding the table's metadata lock. But this time, the State I saw was:

copy to tmp table

This state sent a chill down my spine. It meant MySQL was performing the most primitive and time-consuming table rebuild operation: creating a temporary table with the new structure (without that index) and copying all 60 million rows one by one. During this process, the original table would be locked for a long time. For 60 million rows, this was undoubtedly a disaster.

Act 2: Digging Deeper—Why COPY Instead of INPLACE?

I was using MySQL 5.7, which has long supported Online DDL (INPLACE algorithm) for DROP INDEX, so it shouldn't have fallen back to COPY. To verify, I tried forcing the algorithm:

ALTER TABLE `wa_xinghao` DROP INDEX `product_id-s1`, ALGORITHM=INPLACE, LOCK=NONE;Result: MySQL mercilessly gave me an error: ERROR 1845 (0A000): ALGORITHM=INPLACE is not supported for this operation. Try ALGORITHM=COPY.

Now the problem was clear: For some reason, MySQL deemed INPLACE operations unsupported on this table. I immediately ran SHOW CREATE TABLE wa_xinghao; to check the table structure, and the truth was revealed:

) ENGINE=MyISAM ...Root cause found! It was the MyISAM storage engine. I had assumed it was InnoDB because the entire database appeared to be InnoDB at a glance!

MyISAM is an old, non-transactional engine with many fatal flaws, one of which is very poor support for DDL operations. It hardly has any concept of "online" operations; most ALTER operations lead to table locking and rebuilds.

Act 3: One Problem After Another—Table Corruption and the Long Repair

After identifying the issue, I couldn't tolerate the prolonged table lock and made what now seems a very risky decision: I KILLed the ALTER process that was in copy to tmp table.

After a brief calm, a new nightmare began. When I tried any operation on this table (even SELECT), I received a new error:

ERROR 1194 (HY000): Table 'wa_xinghao' is marked as crashed and should be repaired

Yes, by forcibly interrupting the modification of the underlying files, the MyISAM table "lived up to expectations" and got corrupted. This is typical of MyISAM's non-crash-safe nature.

The only way out was to repair it:

REPAIR TABLE `wa_xinghao`;Then, I entered another round of long waiting. Through SHOW PROCESSLIST;, I saw the state change to:

Repair by sorting

This state indicated MySQL was rebuilding the index file (.MYI) by sorting. For 60 million rows and multiple indexes, this was an extremely CPU- and I/O-intensive process. All I could do was wait patiently and pray the server wouldn't be overwhelmed. This time, I absolutely dared not KILL it again.

Act 4: A "Little Surprise" After the Storm—Weird Index Cardinality

After hours of agony, the table was finally repaired successfully!

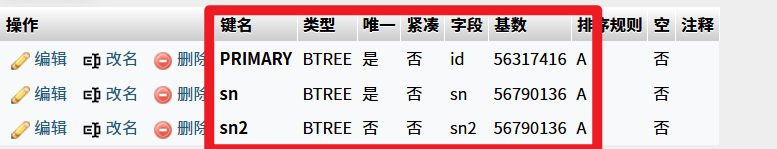

While checking the table structure, I incidentally noticed an interesting phenomenon:

As shown above, the cardinality of the sn and sn2 indexes was actually larger than that of the primary key PRIMARY! This is logically impossible because the primary key is absolutely unique, and its cardinality should equal the total row count.

Reasons revealed:

- Cardinality is an estimate: For performance, MySQL estimates cardinality through random sampling, which inherently has errors.

- Outdated statistics: The main reason is that after the chaotic "crash-repair" process, the table's statistics weren't updated in time, leading to stale and inaccurate data.

The solution was simple: manually force an update of the statistics:

ANALYZE TABLE `wa_xinghao`;After execution, the cardinality returned to normal.

Final Chapter: Reborn from the Ashes—Embracing InnoDB

This thrilling experience made me determined to completely say goodbye to MyISAM. My ultimate goal was: While deleting the redundant index, convert the table engine to InnoDB.

Directly executing ALTER TABLE ... ENGINE=InnoDB would also trigger a table rebuild with locking, which was not acceptable. The best practices are two-fold:

Option 1: The King of Online Operations pt-online-schema-change

This tool from Percona Toolkit is the industry standard for handling large table DDL. By creating a "ghost table" and using triggers to sync incremental data, it can complete table structure changes with minimal locking and business impact.

# One command to complete both "drop index" and "convert engine" tasks

pt-online-schema-change \

--alter "DROP INDEX `product_id-s1`, ENGINE=InnoDB" \

h=your_host,D=your_database,t=wa_xinghao,u=your_user,p=your_password \

--executeOption 2: Manual Offline Operation (Requires Maintenance Window)

If the business allows a brief maintenance window, adopt the "migrate data first, build indexes later" approach for high speed.

- Create a new InnoDB table

wa_xinghao_newwithout indexes. - Quickly export data to a file:

SELECT ... INTO OUTFILE ... FROM wa_xinghao; - Quickly load data into the new table:

LOAD DATA INFILE ... INTO TABLE wa_xinghao_new; - Create required indexes online on the new table:

ALTER TABLE wa_xinghao_new ADD INDEX ...; - During the maintenance window, atomically rename:

RENAME TABLE wa_xinghao TO wa_xinghao_old, wa_xinghao_new TO wa_xinghao;

Reflection

Although the journey was rough, the gains were immense. I've summarized the following key lessons:

- Engine is fundamental: Before any operation on a large table, first check the

ENGINE! Different engines behave vastly differently. - Say goodbye to

MyISAM: For any business with write, update, and high availability requirements, immediately migrateMyISAMtoInnoDB.MyISAM's non-crash-safe nature and table-level locks are ticking time bombs in the system. - Respect the

KILLcommand: Do not lightlyKILLa DDL process performing write operations on aMyISAMtable, or you'll likely end up with a corrupted table. - Leverage professional tools: For large table DDL,

pt-online-schema-changeorgh-ostare not "options" but "necessities." - Understand state information: The

StateinSHOW PROCESSLISTis a golden key for troubleshooting. Bothcopy to tmp tableandRepair by sortingtell us what's happening at the底层.